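This guide helps users of Zhaoxin-platform servers build IaaS systems more quickly and make full use of the performance of the Zhaoxin platform. It does not depend on any specific product model. Instead, it focuses on how to tune and debug on Zhaoxin-platform servers, and it will be refined as evaluation testing deepens and matures.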

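I. System Overview

1. Hardware environment:

The IaaS system used for testing consists of 46 nodes, all built on Zhaoxin-platform dual-socket servers: 40 OpenStack nodes and 6 Ceph distributed storage nodes, as detailed in the table below.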

| Node type | Configuration | Count |
|---|---|---|
| Management node | 128G DDR4 RAM + 120G SSD + 1 GbE x2 | 3 |
| Network node | 128G DDR4 RAM + 120G SSD + 1 GbE x3 | 1 |
| Block storage node | 128G DDR4 RAM + 120G SSD + 1 GbE x2 | 1 |
| Object storage node | 128G DDR4 RAM + 120G SSD + 4T HDD x3 + 1 GbE x2 | 1 |
| Compute node | 128G DDR4 RAM + 120G SSD + 1 GbE x2 | 33 |
| Monitoring node | 128G DDR4 RAM + 120G SSD + 1 GbE x2 | 1 |
| Ceph Mon node | 128G DDR4 RAM + 120G SSD + 10 GbE x2 | 3 |
| Ceph OSD node | 128G DDR4 RAM + 250G SSD + 500G SSD x3 + 2T HDD x12 + 10 GbE x4 | 3 |
| Rally Server | Dell E3-1220v3 server | 1 |

2. Software environment:

| Software | Version | Notes |
|---|---|---|
| Host OS | CentOS 7.6 | ZX Patch v3.0.9.4 installed |
| Python | v2.7.5 | |
| Docker | v19.03.8 | |
| OpenStack | Stein | Container-based deployment |
| MariaDB | v10.3.10 | Multi-master Galera Cluster |
| SQLAlchemy | v0.12.0 | Python module |
| RabbitMQ | v3.8.14 | With Erlang v22.3.4.21 |
| Haproxy | v1.5.18 | |
| KeepAlived | v1.3.5 | |
| Ceph | v14.2.19 | |
| Rally | v2.0 | Concurrency testing tool |
| COSBench | v0.4.2 | Object storage benchmark tool |
| PerfTest | v2.16.0 | Message queue benchmark tool |

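3. Storage design

The local disks of the OpenStack nodes are used only by the operating system. All storage offered to users uses Ceph as the backend: nova, glance, cinder-volume, cinder-backup and manila all store their data in Ceph. For deploying and tuning a Ceph cluster on the Zhaoxin platform, see "Best Practices for Deploying a Ceph Distributed Storage System on the Zhaoxin Platform", published on the official website.

A Swift object storage service is also deployed on local disks. It is not configured for use by any other component, and its performance is outside the scope of this document.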

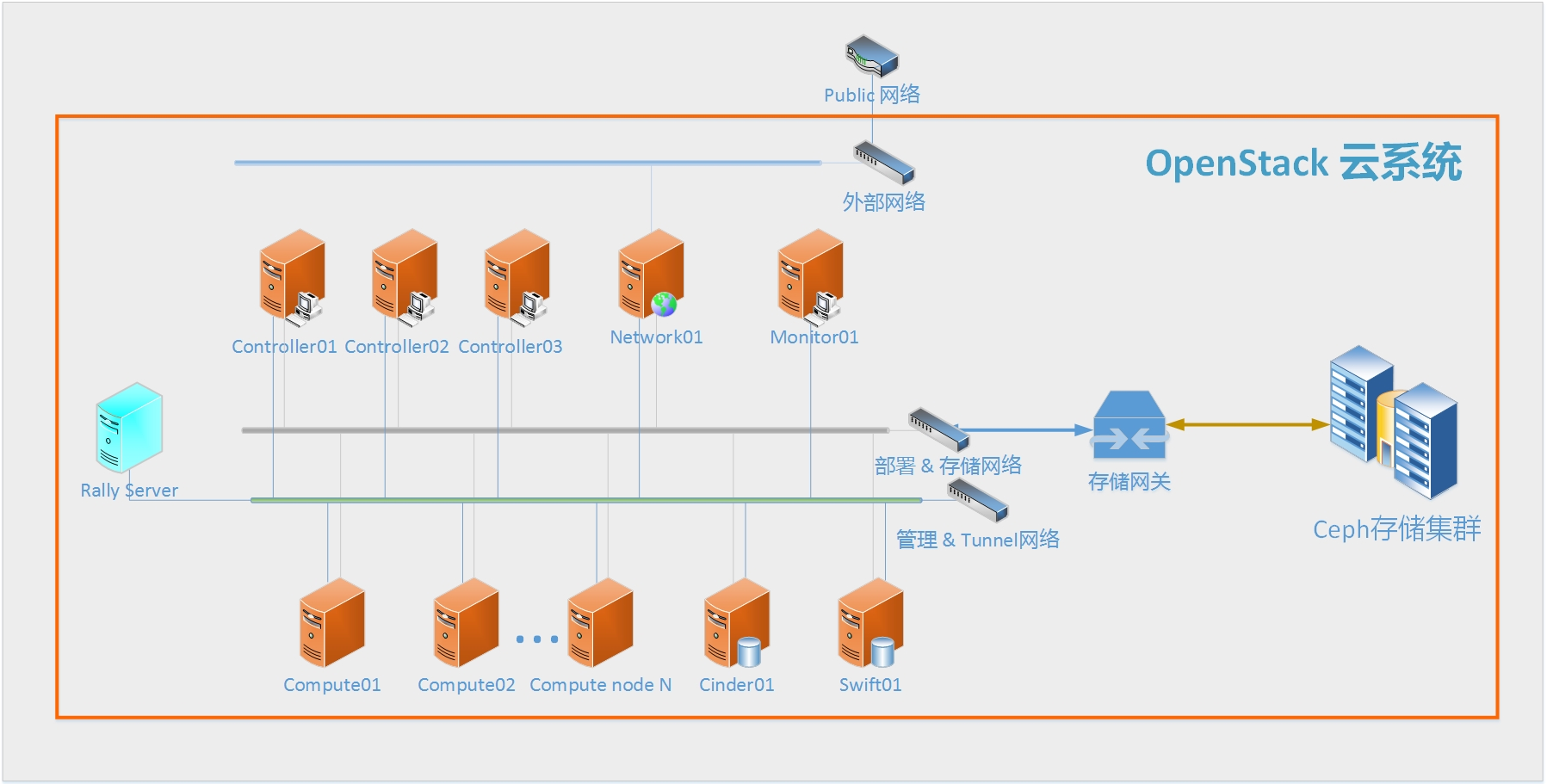

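4. Network design

The cluster network is planned as shown in the figure below, based on the onboard NICs of the Zhaoxin-platform servers, the planned business scale and the existing network environment. The five logical networks are collapsed into three physical networks:

- the management and tunnel networks share one physical network;
- the deployment and storage networks share a second one;
- the external network uses the third.

- Deployment network: used for PXE boot and for accessing the local software repository mirror during installation;

- Management network: used for API access and SSH access between nodes;

- Tunnel network: used for communication between VMs on different compute nodes and for connections to the network node. It carries most of the east-west business traffic;

- Storage network: used to access the unified storage backend.

Figure 1 Network topology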

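II. Tuning Strategy and Methods

1. Performance metrics

This document covers the performance of the IaaS platform itself, not of the virtual machines, so testing and tuning focus on the key OpenStack components. The metrics we track are:

- the completion time of a single request;
- the 95th-percentile completion time of batch requests;
- the tail latency of batch requests.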
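To illustrate the three metrics, the minimal Python sketch below summarizes a list of request durations. It assumes the nearest-rank definition of a percentile and treats the slowest request as the tail. Rally's own reports may compute percentiles differently, so this is an illustration rather than a reimplementation of Rally.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Multiply before dividing so that 95 * 20 / 100 is exactly 19.0.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(durations):
    """Average, 95th-percentile and tail (slowest) completion time, in seconds."""
    return {
        "mean": mean(durations),
        "p95": percentile(durations, 95),
        "tail": max(durations),
    }


if __name__ == "__main__":
    # Hypothetical durations of 20 concurrent requests: 19 normal, 1 straggler.
    durations = [30 + i for i in range(19)] + [120]
    print(summarize(durations))
```

In this example the single straggler barely moves the p95 (48 s) but dominates the tail (120 s). That is why the two metrics are tracked separately.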

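2. Test methodology

Tuning parameters are obtained by iterating: test, analyze, tune, and test again.

- Test tools

For OpenStack components we use Rally for batch testing, with either the scenarios bundled with Rally or custom scenarios as needed. Rally's reports include detailed timing statistics. In this test, batch requests run at a concurrency of 200 against ten compute nodes, with CirrOS as the guest OS.

For single requests, OSprofiler can also be used. Most OpenStack requests pass through several components before they complete. OSprofiler helps analyze how a request is processed, so that likely time sinks can be found and optimized before concurrency testing.

For RabbitMQ we used the PerfTest tool with several typical test cases, focusing on message-queue throughput and message delivery latency.

- Analysis methods

Start from the test results and logs, and resolve the warnings and errors in the logs first. Typical examples are service or database connection timeouts caused by bursts of concurrent requests, failed database queries, and resource exhaustion.

Next, using knowledge of the source code, add timestamps to measure how long each key step of the business flow or data flow takes during the test. Then analyze the cause of each expensive step one by one.

Another common method is to run the same benchmark on a reference platform. Use it in two cases: when the problem has been narrowed down but the cause of a performance issue still cannot be explained, or when the cause may be related to the hardware or system architecture.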

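- Optimization methods

Control-plane optimization in OpenStack has three main goals: raising the success rate under concurrency, shortening the average completion time, and reducing tail latency.

When concurrency tests are run with OpenStack's default configuration, the success rate at 200 concurrent requests varies widely with the function under test. In general, the more components a function involves and the higher the concurrency, the lower the success rate. The success rate is raised mainly by improving hardware performance and by adjusting each component's configuration parameters.

On the Zhaoxin platform, upgrading key hardware (memory and NICs) helps, but two points matter more:

- the servers must have the Zhaoxin BSP patch applied;
- affinity settings should be designed around the Zhaoxin platform's NUMA topology from the start of deployment planning. Avoid cross-NUMA access as far as possible, and where it cannot be avoided, use adjacent nodes rather than crossing sockets.

On the component side, trace the processing path of requests to find the functions that take the longest. As concurrency rises, components often hit retries and timeouts, which in turn cause requests to fail. When processing efficiency cannot be improved, increase the retry count or timeout appropriately so that requests do not fail outright.

To shorten the average completion time, you also need to identify exactly which processing stage each delay occurs in, beyond the hardware improvements mentioned above. Use tracing to map out the control flow and data flow, and measure the time spent in each stage, for example by analyzing logs. Once the expensive functions are found, the available tuning methods are:

1. Modify the component configuration to increase the number of workers and make full use of multiple cores;

2. Where component performance depends on OS configuration, tune the OS. Refer to the tuning guides of the OS and to Zhaoxin's other official server tuning documents;

3. Components often offer several ways to implement the same function; configure them to use the more efficient one;

4. Look in the community for patches that improve request-processing performance. Low efficiency sometimes comes from the implementation itself, and a patch may substantially improve throughput. If no suitable patch exists, you have to develop one yourself;

5. Optimize according to the characteristics of Python to avoid blocking threads;

6. Optimize the deployment layout: add component instances or nodes according to the concurrency pressure, or migrate components to balance the load across nodes;

7. Apply OS kernel patches to the servers to improve kernel processing capacity;

8. For general-purpose software such as message queues, databases and load balancers, upgrade to newer versions or switch to better-performing alternatives.

All recommended parameters in the following chapters are derived from test results.

Note that the test case strongly affects parameter values. For example, if the image used for the 200-concurrency VM-creation test were changed from CirrOS to Ubuntu, many timeouts and retry counts would probably have to be increased to keep the success rate at 100%. The recommended values in this document therefore do not apply to production environments and are for tuning reference only.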

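III. Configuration of Key OpenStack Components

1. Database

The database is a critical service in OpenStack. Each component has its own database in the database service, which stores data about services, resources, users and so on. The database is also used as a coordination mechanism between components. As a result, almost every request involves some database reads or writes.

During performance tuning of the cloud platform, pay close attention to database response times. Slow database responses at run time are usually hard to diagnose. They involve the database server/cluster, the proxy server and the clients (the components), and each must be checked to find the source of the problem before making the corresponding change. This section covers server-side and client-side parameters; for the proxy server, see the Haproxy section.

1.1 MariaDB

In the test system, three MariaDB nodes form a multi-master Galera Cluster, fronted by Haproxy for active/standby high availability. For general database tuning, see the "MySQL Optimization Manual". One parameter needs special attention in an OpenStack cloud:

- max_allowed_packet

This parameter sets the largest packet the MariaDB server will accept. Large inserts and updates sometimes fail because max_allowed_packet is set too small.

- Recommended configuration:

The default is 1024 KB (1 MB) on older MySQL/MariaDB releases; MariaDB 10.2.4 and later default to 16 MB. An OpenStack cluster can send packets larger than the default, so increase the value appropriately.

Edit the MariaDB configuration file galera.cnf:

```
[mysqld]
max_allowed_packet = 64M
```

Restart the MariaDB service for the change to take effect.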

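1.2 oslo_db

Components access the database through oslo_db. In our deployment, oslo_db + SQLAlchemy serves as the database client, so for client-side parameters and tuning methods refer to the official SQLAlchemy documentation. Newer SQLAlchemy releases bring performance improvements, but do not upgrade casually: version compatibility must be taken into account.

- slave_connection

oslo_db in Stein supports the slave_connection option, and some components, such as nova, already support read/write splitting. Writes use connection and reads use slave_connection, which improves read performance.

Reference configuration:

1. Use Haproxy to provide separate entry points for reads and writes. For example, writes use port 3306 with one active and two backup servers, and reads use port 3307 balanced by least connections (leastconn).

2. For each component that supports read/write splitting, add slave_connection to the [database] section of its configuration file.

Note: some components accept a slave_connection setting but their code never actually uses it when reading from the database. Verify this carefully for the specific version.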
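To make the read/write split concrete, the sketch below generates the [database] section for one component. The Haproxy virtual IP, the credentials, the helper name and the mysql+pymysql driver prefix are placeholders assumed for illustration. Ports 3306 and 3307 follow the example above.

```python
import configparser
import io


def database_section(user, password, vip, db, write_port=3306, read_port=3307):
    """Render a [database] section with separate write and read endpoints.

    Hypothetical helper: vip is the Haproxy virtual IP, and the two ports
    are the Haproxy frontends for writes and reads.
    """
    url = "mysql+pymysql://{u}:{p}@{h}:{port}/{db}"
    # interpolation=None so that a '%' in a password is written literally.
    cfg = configparser.ConfigParser(interpolation=None)
    cfg["database"] = {
        # Writes go to the active/backup frontend.
        "connection": url.format(u=user, p=password, h=vip, port=write_port, db=db),
        # Reads go to the least-connections frontend.
        "slave_connection": url.format(u=user, p=password, h=vip, port=read_port, db=db),
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(database_section("nova", "NOVA_DBPASS", "192.0.2.10", "nova"))
```

Only components whose code actually reads through slave_connection benefit from the second line.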

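- Connection pool

Establishing a database connection is relatively expensive, so a connection pool is provided and pooled connections are reused to improve efficiency. During tuning, watch the connections between each component and the database, such as the component's database response times and the connection-related messages in its logs. If database responses are slow and investigation suggests the component spends too long obtaining connections, try tuning the parameters below. The tests in this document used the default values; adjust them based on run-time behavior. The main connection-pool parameters are:

min_pool_size: the minimum number of open SQL connections kept in the pool; default 1.

max_pool_size: the maximum number of open SQL connections kept in the pool; default 5; 0 means no limit.

max_overflow: the number of connections allowed beyond max_pool_size; default 50.

pool_timeout: applies when no idle connection is available and the number of connections has already reached max_pool_size + max_overflow. A process requesting a connection then waits up to pool_timeout seconds (default 30 s) and raises an exception if it still has not obtained one. If this exception appears, consider enlarging the pool.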
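A quick way to reason about these limits is to compute the worst-case number of connections a service can open. The sketch below assumes each worker process keeps its own pool, so the per-process ceiling is max_pool_size + max_overflow. The worker counts are hypothetical; compare the resulting total with MariaDB's max_connections setting.

```python
def max_connections_per_service(processes, max_pool_size=5, max_overflow=50):
    """Worst-case DB connections for one service.

    Assumes one SQLAlchemy pool per worker process; returns None when
    max_pool_size is 0, which oslo_db treats as "no limit".
    """
    if max_pool_size == 0:
        return None
    return processes * (max_pool_size + max_overflow)


if __name__ == "__main__":
    # Hypothetical worker counts on one controller node.
    workers = {"nova-api": 12, "nova-conductor": 16, "cinder-api": 12}
    total = sum(max_connections_per_service(n) for n in workers.values())
    print("worst case:", total, "connections from this node")
```

If the total across all nodes exceeds MariaDB's max_connections, connection errors can appear under load even though each individual pool looks small.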

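2. Message queue

2.1 RabbitMQ

In the test system, three nodes form a RabbitMQ mirrored-queue cluster, and all nodes are disk nodes.

The main performance concern with RabbitMQ is message delivery latency. In a cluster, most of that latency is spent keeping the mirrored queues consistent. This processing is very complex and hard to tune. The current tuning options for a cluster are:

1. RabbitMQ runs on Erlang, and comparison tests show large performance differences between older and newer versions. Use newer versions where possible, for example RabbitMQ 3.8 or later with Erlang v22.3 or later (choose the Erlang version to match the RabbitMQ version).

2. The more mirrors a queue has in the cluster, the longer each message spends on consistency, so reduce the number of mirrors where the situation allows.

3. If RabbitMQ is deployed in containers, configure Docker so that RabbitMQ gets more CPU time and is not starved by other services.

The recommended tuning parameters are:

- collect_statistics_interval

By default, RabbitMQ Server collects statistics every 5 s, and rates such as publish and delivery rates are computed over this period.

- Recommended configuration

Increasing this value reduces the CPU load caused by RabbitMQ Server collecting large amounts of state information. The unit is ms.

Edit the RabbitMQ configuration file rabbitmq.conf:

```
collect_statistics_interval = 30000
```

Restart the RabbitMQ service for the change to take effect.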

- cpu_share

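Docker lets you assign each container a number that represents its CPU share; the default share of every container is 1024. The share is relative and has no absolute meaning by itself. When several containers run on a host, each container's proportion of CPU time is its share divided by the total of all shares. The ratio only takes effect when CPU is contended. If CPU is idle, a container with a low cpu-shares value can still use more than its proportional share.

- Recommended configuration

The controller nodes run the API servers of the OpenStack components as well as the RabbitMQ server. When RabbitMQ is under concurrency testing, raise the CPU share of the RabbitMQ container on those nodes so that it gets more CPU. If conditions allow, deploy the RabbitMQ cluster on dedicated servers so that it does not compete with other services for CPU. A dedicated RabbitMQ cluster sustains higher concurrency.

Configuration:

```
docker update --cpu-shares 10240 rabbitmq-server
```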
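The proportional rule above can be checked with a small calculation. The sketch assumes all listed containers are CPU-bound on the same CPUs and ignores processes outside Docker, so the numbers are an idealized estimate. The container names and counts are hypothetical.

```python
def cpu_fraction(shares, container):
    """Share of CPU time a container gets when every container is busy."""
    total = sum(shares.values())
    return shares[container] / total


if __name__ == "__main__":
    # Hypothetical controller: RabbitMQ plus nine busy API containers.
    others = {"api-%d" % i: 1024 for i in range(9)}
    default = {**others, "rabbitmq-server": 1024}
    boosted = {**others, "rabbitmq-server": 10240}
    print("default: %.1f%%" % (100 * cpu_fraction(default, "rabbitmq-server")))
    print("boosted: %.1f%%" % (100 * cpu_fraction(boosted, "rabbitmq-server")))
```

With nine busy neighbours, raising the share tenfold lifts RabbitMQ from about 10% to about 53% of contended CPU time. The share grows, but not tenfold.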

- ha-mode

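Mirrored queues in a RabbitMQ cluster can be replicated across multiple nodes. Each queue has one master and several slaves:

- a consume operation is performed first on the master and then repeated on the slaves;
- messages published by producers are replicated to all nodes;
- other operations go through the master, which applies them to the slaves.

The mirroring policies are:

| ha-mode | ha-params | Description |
|---|---|---|
| all | (none) | Every node in the cluster holds a mirror of the queue |
| exactly | count | Sets the number of queue mirrors in the cluster |
| nodes | node names | Mirrors the queue on the listed nodes |

In this deployment the default ha-mode is all, so in a three-node cluster each queue is mirrored on all 3 nodes. A policy is defined with:

```
rabbitmqctl set_policy -p <vhost> <name> <pattern> <definition>
```

pattern: a regular expression. The policy is applied to the exchanges or queues whose names match it.

definition: the policy parameters, given as a JSON object.

- Recommended configuration

```
rabbitmqctl set_policy -p / ha-exactly '^' '{"ha-mode":"exactly","ha-params":2}'
```

A cluster with two copies of each queue handles high concurrency better than one with three copies. In our tests, the former supported 1.75 times the concurrency of the latter.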
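The quoting in the policy definition is easy to get wrong, because the JSON needs double quotes inside a single-quoted shell argument. The sketch below builds the argument list with json.dumps and shows the equivalent shell command via shlex.quote. Passing the list to subprocess.run would avoid shell quoting entirely. The helper name is illustrative.

```python
import json
import shlex


def ha_policy_args(vhost, name, pattern, mode, params=None):
    """Argument list for `rabbitmqctl set_policy` with an ha-mode definition."""
    definition = {"ha-mode": mode}
    if params is not None:
        definition["ha-params"] = params
    return ["rabbitmqctl", "set_policy", "-p", vhost, name, pattern,
            json.dumps(definition, separators=(",", ":"))]


if __name__ == "__main__":
    args = ha_policy_args("/", "ha-exactly", "^", "exactly", 2)
    # Shell-ready form; subprocess.run(args) would need no quoting at all.
    print(" ".join(shlex.quote(a) for a in args))
```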

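- CPU binding

RabbitMQ runs in the Erlang virtual machine. The Erlang version used here supports SMP and uses multiple schedulers, each with its own run queue. By default, the VM starts as many schedulers as there are logical CPUs (this can be limited with the +S startup flag), and each scheduler takes processes from its own run queue. Because of the OS thread scheduler, however, Erlang scheduler threads migrate between cores. This increases cache misses and hurts performance.

The +sbt flag binds schedulers to logical cores. Erlang supports several binding strategies; see the Erlang documentation for details.

- Recommended configuration

RabbitMQ's default is db, which binds schedulers to the NUMA nodes in turn so that all nodes are used. However, the schedulers' run queues are balanced against each other and tasks migrate between queues. To make better use of the cache, we recommend setting +sbt to nnts in this test environment, which binds scheduler threads NUMA node by NUMA node.

Edit the RabbitMQ configuration file rabbitmq-env.conf:

```
RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS='+sbt nnts'
```

Restart the RabbitMQ service for the change to take effect.

You can combine this with other Erlang VM tuning methods suited to your environment, such as enabling HiPE. A detailed treatment of Erlang VM parameters and tuning is outside the scope of this document.

- Heartbeat

A heartbeat detects whether the peer of a connection is still alive. The mechanism works as follows:

- it checks whether data is being sent and received normally on the socket;
- if no data has been exchanged for some time, a heartbeat packet is sent to the peer;
- if there is no reply within a certain time, the heartbeat times out and the peer is assumed to have crashed.

Under heavy load, a component may not process heartbeat messages in time, so RabbitMQ Server does not receive the heartbeat reply within the timeout. RabbitMQ Server then closes the connection and errors follow. Increase the heartbeat timeout of both the components and RabbitMQ Server to avoid this; see the related description in the Cinder section.

Edit the RabbitMQ configuration file rabbitmq.conf:

```
heartbeat = 180
```

Restart the RabbitMQ service for the change to take effect.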

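2.2 oslo_messaging

Every component connects to RabbitMQ through oslo_messaging. The library provides the following parameters for tuning RPC handling under high load:

rpc_conn_pool_size: size of the RPC connection pool; default 30.

executor_thread_pool_size: number of threads or green threads that execute RPC handling; default 64.

rpc_response_timeout: timeout for responses to RPC calls; default 60 s.

In our tests the first two parameters kept their defaults, and rpc_response_timeout was increased to match the actual processing capacity of each component.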

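3. Nova

3.1 nova-api

- Workers

nova-api is a WSGI server, also called an API server, that receives external REST requests. At startup the API server creates the number of worker processes set in its configuration file to accept requests. If there are too few workers for the concurrency, requests queue up and take longer to process. Administrators should therefore size the workers to the actual workload.

For an API server that has a node to itself, workers can usually be set equal to the number of CPU cores. When several API servers share a node (as on the controller nodes), physical CPU is limited. In that case, size each server's workers according to the node's capacity and the load on each API server.

For API servers deployed in containers, also consider setting NUMA affinity for the CPU and memory used by each container, keeping different containers on different NUMA nodes where possible. This reduces CPU contention and makes better use of the cache. The same applies to other containerized services.

The nova-api configuration file has several such options, for example osapi_compute_workers and metadata_workers, which can be adjusted to the node's capacity and load.

Other components, such as glance, have their own API servers. As with nova-api, increasing their workers can improve request-handling performance.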
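When several API servers share a controller, one simple starting point is to split the cores in proportion to each server's observed load. The sketch below does this with integer division and a floor of one worker. The load weights are hypothetical and would come from your own monitoring, and the result is only a first guess to refine through testing.

```python
def split_workers(total_cores, load_weights, minimum=1):
    """Divide a node's cores among co-located API servers by relative load."""
    total_weight = sum(load_weights.values())
    if total_weight <= 0:
        raise ValueError("weights must be positive")
    return {name: max(minimum, total_cores * weight // total_weight)
            for name, weight in load_weights.items()}


if __name__ == "__main__":
    # Hypothetical relative load of each API server on a 16-core controller.
    weights = {"nova-api": 4, "neutron-server": 2, "glance-api": 1, "cinder-api": 1}
    print(split_workers(16, weights))
```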

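- Patch: live-migration performance

During a live migration, nova-compute accesses Instance fields from two places: a child thread running _live_migration_operation() and the main thread running _live_migration_monitor(). If Instance.fields have not been accessed before, both threads may call obj_load_attr() of class Instance in nova/objects/instance.py at the same time. The re-entrant call to utils.temporary_mutation() then leaves Context.read_deleted set to "yes". As a result, _update_usage_from_instances() later also counts instances that have already been deleted, which it should not, and takes longer.

OpenStack Stein has this bug. Later releases fetch Instance.fields earlier in other operations, so nova-compute no longer needs to call obj_load_attr() during live migration. The bug report is at https://bugs.launchpad.net/nova/+bug/1941819. The bug can be avoided by applying the patch https://github.com/openstack/nova/commit/84db8b3f3d202a234221ed265ec00a7cf32999c9, which fetches Instance.fields in advance in the Nova API.

For patch details, see Appendix A1.1.

3.2 nova-scheduler & nova-conductor

These two services also have a workers option that controls the number of processing workers and can be adjusted to the actual workload; in our tests the defaults were sufficient. The default for nova-conductor is the number of CPU cores. For nova-scheduler, the default is the number of CPU cores when filter_scheduler is used and 1 for any other scheduler driver.

3.3 nova-compute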

- vcpu_pin_set

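Restricts which pCPUs on the compute node guests may use, leaving some CPUs to the host so that it keeps running normally. This prevents the host from being starved of CPU when VMs are heavily loaded.

- Recommended configuration

Reserve one physical core on each NUMA node for the host. For example, on a platform with two NUMA nodes and CPUs 0-15, reserve cpu0 and cpu15 for the host as follows:

Edit the nova-compute configuration file nova.conf:

```
[DEFAULT]
vcpu_pin_set = 1-14
```

Restart the nova_compute service for the change to take effect.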
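The vcpu_pin_set value can be derived from the CPUs you want to reserve. The sketch below formats the remaining CPU IDs as a range list. It assumes a 16-CPU, two-node layout (CPUs 0-7 on node 0 and 8-15 on node 1), so check the real layout with lscpu or numactl --hardware first.

```python
def cpu_ranges(cpus):
    """Format CPU IDs as a compact range list, e.g. [1, 2, 3, 5] -> '1-3,5'."""
    ordered = sorted(set(cpus))
    if not ordered:
        raise ValueError("no CPUs left for guests")
    parts = []
    start = prev = ordered[0]
    # A trailing None sentinel flushes the last run of consecutive IDs.
    for cpu in ordered[1:] + [None]:
        if cpu is not None and cpu == prev + 1:
            prev = cpu
            continue
        parts.append(str(start) if start == prev else "%d-%d" % (start, prev))
        start = prev = cpu
    return ",".join(parts)


def vcpu_pin_set(total_cpus, reserved):
    """CPUs left to guests after removing the host-reserved ones."""
    return cpu_ranges(c for c in range(total_cpus) if c not in reserved)


if __name__ == "__main__":
    print(vcpu_pin_set(16, {0, 15}))  # the example above
    print(vcpu_pin_set(16, {0, 8}))   # first CPU of each assumed node
```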

- reserved_host_memory_mb

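This option reserves memory on the compute node for the host system, so that VMs cannot consume so much memory that tasks on the host can no longer run normally.

- Recommended configuration

Choose the value based on the total memory, the tasks running on the host and the maximum memory the VMs are expected to use. Reserve at least 1024 MB for the system.

Edit the nova-compute configuration file nova.conf:

```
[DEFAULT]
# Unit: MB
reserved_host_memory_mb = 4096
```

Restart the nova_compute service for the change to take effect.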

- cpu_allocation_ratio

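Sets the vCPU overcommit ratio.

- Recommended configuration

Based on the performance of Zhaoxin CPUs, in a desktop cloud each pCPU can back 2 vCPUs.

Edit the nova-compute configuration file nova.conf:

```
[DEFAULT]
cpu_allocation_ratio = 2
```

Restart the nova_compute service for the change to take effect.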
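To see what the ratio means for capacity, the sketch below counts the CPUs in a simple vcpu_pin_set string and multiplies by cpu_allocation_ratio. It assumes no further host CPUs are reserved. It only handles plain ranges and single IDs; the "^" exclusion syntax that nova also accepts is deliberately not supported.

```python
def parse_cpu_spec(spec):
    """Expand a simple CPU list such as '1-14' or '1-7,9-15' into a set of IDs."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if part.startswith("^"):
            raise ValueError("exclusions are not handled in this sketch")
        if "-" in part:
            lo, hi = (int(x) for x in part.split("-"))
            cpus.update(range(lo, hi + 1))
        else:
            cpus.add(int(part))
    return cpus


def schedulable_vcpus(spec, cpu_allocation_ratio):
    """Guest vCPUs the host can offer: pinned pCPUs times the overcommit ratio."""
    return int(len(parse_cpu_spec(spec)) * cpu_allocation_ratio)


if __name__ == "__main__":
    print(schedulable_vcpus("1-14", 2))
```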

- block_device_allocate_retries

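Creating a VM with a block device requires creating a volume from blank, an image or a snapshot. Before the volume can be attached to the VM, its status must be "available". block_device_allocate_retries sets how many times nova checks whether the volume status is "available". The related option block_device_allocate_retries_interval sets the interval between checks; the default is 3 s.

- Recommended configuration

The default is 60 retries. When cinder is heavily loaded, the volume may still not be "available" after 60 checks. Increase the number of retries to avoid VM creation failures.

Edit the nova-compute configuration file nova.conf:

```
[DEFAULT]
block_device_allocate_retries = 150
```

Restart the nova_compute service for the change to take effect.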
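The two settings bound how long nova waits for the volume: roughly the retry count multiplied by the interval. That gives 60 x 3 = 180 s by default, versus 150 x 3 = 450 s with the recommended value. The simplified polling loop below illustrates this. get_status is a hypothetical callable standing in for the Cinder status query, and nova's real implementation differs in detail.

```python
import time


def wait_for_available(get_status, retries=60, interval=3, sleep=time.sleep):
    """Simplified model of nova polling a new volume until it is 'available'.

    get_status is a hypothetical callable returning the volume status.
    Returns the attempt on which the volume became available.
    """
    for attempt in range(1, retries + 1):
        if get_status() == "available":
            return attempt
        sleep(interval)
    # Worst case: retries * interval seconds spent waiting.
    raise TimeoutError("volume not available after %d s" % (retries * interval))


if __name__ == "__main__":
    for retries in (60, 150):
        print(retries, "retries ->", retries * 3, "s worst-case wait")
```

The sleep parameter is injectable so the loop can be exercised without real waiting.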

- vif_plugging_timeout

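The time nova-compute waits for the Neutron VIF plugging event to arrive.

- Recommended configuration

The default is 300 s. With high concurrency, the event may not arrive within 300 s. In the Zhaoxin desktop cloud test, creating 200 VMs concurrently took about 360 s. Adjust this value to the system's maximum concurrency.

Edit the nova-compute configuration file nova.conf:

```
[DEFAULT]
vif_plugging_timeout = 500
```

Restart the nova_compute service for the change to take effect.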

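- Patch: live-migration performance

This patch does two things: it removes an unnecessary get_volume_connector() call during migration, and it avoids unnecessary Neutron requests. Together these make live migration more efficient and shorten the time the VM is out of service. Patch links:

https://review.opendev.org/c/openstack/nova/+/795027

https://opendev.org/openstack/nova/commit/6488a5dfb293831a448596e2084f484dd0bfa916

For patch details, see Appendix A1.2.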

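4. Cinder

4.1 cinder-api

- Workers

See nova-api. The difference is that in Stein, cinder-api is deployed under httpd. Besides the cinder workers options (such as osapi_volume_workers), you can therefore also tune processes and threads in cinder-wsgi.conf. Keystone and horizon are deployed the same way.

Edit cinder-wsgi.conf:

```
WSGIDaemonProcess cinder-api processes=12 threads=3 user=cinder group=cinder display-name=%{GROUP} python-path=/var/lib/kolla/venv/lib/python2.7/site-packages ……
```

Restart the cinder-api service for the change to take effect.

- rpc_response_timeout

The time cinder-api waits for an RPC reply.

- Recommended configuration

The default is 60 s. During highly concurrent attach_volume operations, cinder-volume takes a long time to respond to cinder-api. If RPC timeout errors are reported, increase this value.

Edit the cinder-api configuration file cinder.conf:

```
[DEFAULT]
rpc_response_timeout = 600
```

Restart the cinder-api service for the change to take effect.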

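4.2 cinder-volume

- Heartbeat

Between cinder-volume and RabbitMQ

See the "Heartbeat" subsection of the RabbitMQ section.

Cinder's heartbeat_timeout_threshold sets the heartbeat timeout. Heartbeats are sent at an interval of half the timeout.

- Recommended configuration

The default heartbeat_timeout_threshold of cinder-volume is 60 s. Under heavy load, cinder-volume may not process heartbeat messages in time, so RabbitMQ Server does not receive the heartbeat reply within the timeout. RabbitMQ Server then closes the connection, which leads to a series of errors. Increase the heartbeat timeout of both cinder-volume and RabbitMQ Server to avoid this. Disabling heartbeats (heartbeat=0) is not recommended.

Edit the cinder-volume configuration file cinder.conf:

```
[oslo_messaging_rabbit]
heartbeat_timeout_threshold = 180
```

Restart the cinder-volume service for the change to take effect.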
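Why raise the timeout on both sides? According to the RabbitMQ heartbeat documentation, the client and server negotiate the timeout when the connection opens. If either side proposes 0, the larger value is used; otherwise the smaller one is used. The sketch below encodes that rule, plus the half-timeout check interval described above. It assumes oslo.messaging's heartbeat_rate is 2.

```python
def negotiated_heartbeat(client_timeout, server_timeout):
    """Heartbeat negotiation rule as described in the RabbitMQ docs."""
    if client_timeout == 0 or server_timeout == 0:
        return max(client_timeout, server_timeout)
    return min(client_timeout, server_timeout)


def check_interval(timeout, heartbeat_rate=2):
    """Seconds between client-side heartbeat checks (timeout / rate, assumed)."""
    return timeout / heartbeat_rate


if __name__ == "__main__":
    # Raising only cinder-volume's threshold leaves the server's 60 s in force.
    print(negotiated_heartbeat(180, 60))
    # Raising both gives a 180 s timeout, checked every 90 s.
    t = negotiated_heartbeat(180, 180)
    print(t, check_interval(t))
```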

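Between services

OpenStack is a distributed system in which services running on different hosts work together. Each service periodically updates its own update time in the database. A service is considered online if the time since its last update does not exceed service_down_time. This can also be seen as a heartbeat mechanism.

Under high load, database access latency may increase, and the periodic reporting task may be delayed because the CPU is busy. Either can cause a service to be falsely reported as down.

- Recommended configuration

report_interval: the status reporting interval, i.e. the heartbeat interval; default 10 s.

service_down_time: the maximum time since the last heartbeat; default 60 s. A service with no heartbeat for longer than this is considered down.

report_interval must be smaller than service_down_time. Increase service_down_time so that cinder-volume is not falsely marked down when its periodic tasks occupy the CPU and delay its status reports.

Edit the cinder-volume configuration file cinder.conf:

```
[DEFAULT]
service_down_time = 120
```

Restart the cinder_volume service for the change to take effect.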
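The liveness rule can be sketched as follows. This is a simplified model of the update-time comparison described above, not Cinder's actual code, and the timestamps are hypothetical. It shows that a report delayed to 75 s looks down with the default service_down_time of 60 s but up with 120 s.

```python
from datetime import datetime, timedelta


def check_intervals(report_interval, service_down_time):
    """report_interval must be smaller than service_down_time."""
    if report_interval >= service_down_time:
        raise ValueError("report_interval must be < service_down_time")


def service_is_up(last_update, now, service_down_time=60):
    """Simplified model: up if the last heartbeat is recent enough."""
    return now - last_update <= timedelta(seconds=service_down_time)


if __name__ == "__main__":
    check_intervals(10, 120)
    now = datetime(2021, 9, 10, 12, 0, 0)
    # Hypothetical: a busy periodic task delayed the last report to 75 s ago.
    last = now - timedelta(seconds=75)
    print(service_is_up(last, now, 60), service_is_up(last, now, 120))
```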

- rbd_exclusive_cinder_pool

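OpenStack Ocata introduced the rbd_exclusive_cinder_pool option. If the RBD pool is used exclusively by Cinder, set rbd_exclusive_cinder_pool=true. Cinder then obtains the provisioned size by querying its database instead of polling every volume on the backend. This noticeably shortens the query time and reduces the load on both the Ceph cluster and the cinder-volume service.

- Recommended configuration

Edit the cinder-volume configuration file cinder.conf:

```
[DEFAULT]
enabled_backends = rbd-1

[rbd-1]
rbd_exclusive_cinder_pool = true
```

Restart the cinder-volume service for the change to take effect.

- image_volume_cache_enabled

Since Liberty, Cinder can use an image volume cache, which speeds up creating volumes from images. The first time a volume is created from an image, Cinder also creates a cached image-volume owned by the block storage internal tenant. Later volumes created from the same image are cloned from the cached image-volume, so the image no longer has to be downloaded locally and written to the volume.

- Recommended configuration

cinder_internal_tenant_project_id: the ID of the OpenStack project "service".

cinder_internal_tenant_user_id: the ID of the OpenStack user "cinder".

image_volume_cache_max_size_gb: the maximum total size of cached image-volumes; 0 means no limit.

image_volume_cache_max_count: the maximum number of cached image-volumes; 0 means no limit.

Edit the cinder-volume configuration file cinder.conf:

```
[DEFAULT]
cinder_internal_tenant_project_id = c4076a45bcac411bacf20eb4fecb50e0
cinder_internal_tenant_user_id = 4fe8e33010fd4263be493c1c9681bec8

[backend_defaults]
image_volume_cache_enabled = True
image_volume_cache_max_size_gb = 0
image_volume_cache_max_count = 0
```

Restart the cinder-volume service for the change to take effect.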

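5. Neutron

5.1 Neutron Server

neutron-server is the API server of the neutron component; see the nova-api description for tuning. The adjustable options are api_workers and metadata_workers.

- rpc_workers

In neutron's architecture, the core service and the plugins use a fork-then-green-thread model: the main process forks child processes, and each child creates green threads to run the handlers. This lets concurrent processing use multiple cores. rpc_workers controls the number of processes created for RPC handling, and its default is half of api_workers. Because our system is container-based, the default is sufficient.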

5.2 Neutron DHCP Agent

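The two patches below mainly affect the neutron services on the network node.

- Improving network port management efficiency

Patch1

In the Neutron DHCP agent, the "ip route" Linux command executed through oslo.rootwrap is replaced with pyroute2 calls. This makes creating and deleting ports in the Neutron DHCP agent more efficient. Patch link:

https://opendev.org/openstack/neutron/commit/06997136097152ea67611ec56b345e5867184df5

For patch details, see Appendix A1.3.

Patch2

In the Neutron DHCP agent, the "dhcp_release" Linux command is executed through oslo.privsep instead of oslo.rootwrap. This makes creating and deleting ports in the Neutron DHCP agent more efficient. Patch links:

https://opendev.org/openstack/neutron/commit/e332054d63cfc6a2f8f65b1b9de192ae0df9ebb3

https://opendev.org/openstack/neutron/commit/2864957ca53a346418f89cc945bba5cdcf204799

For patch details, see Appendix A1.4.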

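5.3 Neutron Open vSwitch Agent

- Improving network port processing efficiency

polling_interval

When the Neutron L2 agent is the Open vSwitch agent, neutron-openvswitch-agent runs an RPC loop after startup to handle port additions, deletions and modifications. polling_interval sets the interval between iterations of this loop.

- Recommended configuration

The default is 2 s. Lowering it makes port status updates faster; in particular, it shortens live migration. Setting it to 0, however, makes neutron-openvswitch-agent consume too much CPU.

Edit the neutron-openvswitch-agent configuration file ml2_conf.ini:

```
[agent]
polling_interval = 1
```

Restart the neutron-openvswitch-agent service on the compute nodes for the change to take effect.

Patch

In the Neutron Open vSwitch agent, the "iptables" and "ipset" commands are executed through oslo.privsep instead of oslo.rootwrap. This lets the Neutron Open vSwitch agent process network ports more efficiently. Patch links:

https://opendev.org/openstack/neutron/commit/6c75316ca0a7ee2f6513bb6bc0797678ef419d24

https://opendev.org/openstack/neutron/commit/5a419cbc84e26b4a3b1d0dbe5166c1ab83cc825b

For patch details, see Appendix A1.5.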

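5.4 Reducing live-migration downtime

In live-migration tests on OpenStack Stein, a VM migrated to the target host cannot be pinged right away; there is a noticeable delay. The VM sends its RARP broadcast as soon as the migration succeeds, but the port behind its NIC is not yet actually up. Bug reports:

https://bugs.launchpad.net/neutron/+bug/1901707

https://bugs.launchpad.net/neutron/+bug/1815989

For patch details, see Appendix B1.1 to B1.7. The patches touch the neutron and nova modules:

https://review.opendev.org/c/openstack/neutron/+/790702

https://review.opendev.org/c/openstack/nova/+/767368

5.5 Network performance optimization

For stable, high network performance, confine the NIC hardware interrupts and the corresponding VM to CPUs in the same CPU cluster when deploying VMs. This avoids unnecessary cache misses and improves network stability and performance.

5.6 VXLAN performance optimization

Mainstream tunnel networks such as VXLAN are generally built on UDP. When the UDP checksum field is zero, the receiver cannot perform GRO (generic receive offload) on VXLAN packets in time, which seriously degrades network performance. The community has fixed this issue; for details, see the link below:

With this patch, the same VXLAN iperf3 test on 10 GbE NICs improves by a factor of more than 2.

For patch details, see Appendix C1.1.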

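6. Keystone

- Number of processes

processes is a parameter of the WSGIDaemonProcess directive. It sets the number of processes the daemon starts.

- Recommended configuration

The default is 1. When keystone is under heavy load, a single WSGI process cannot handle high concurrency, so increase processes. Values larger than the number of CPU cores bring little benefit.

Edit the keystone configuration file wsgi-keystone.conf:

```
WSGIDaemonProcess keystone-public processes=12 threads=1 user=keystone group=keystone display-name=%{GROUP} python-path=/var/lib/kolla/venv/lib/python2.7/site-packages ……
WSGIDaemonProcess keystone-admin processes=12 threads=1 user=keystone group=keystone display-name=%{GROUP} python-path=/var/lib/kolla/venv/lib/python2.7/site-packages ……
```

Restart the keystone service for the change to take effect.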

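7. Haproxy

- Timeout settings

1. timeout http-request: the maximum time allowed to receive a complete HTTP request.

2. timeout queue: when the number of requests to a server reaches maxconn, new connections are placed in a queue. A request that waits in the queue longer than timeout queue is considered unserviceable and dropped, and a 503 error is returned to the client.

3. timeout connect: the maximum time to wait for a connection attempt to a backend server to succeed.

4. timeout client: the maximum inactivity time on the client side, when the client is expected to send data or acknowledge.

5. timeout server: the maximum inactivity time on the server side.

- Recommended configuration

Edit the Haproxy configuration file haproxy.cfg. The unit s means seconds and m means minutes.

```
defaults
    timeout http-request 100s
    timeout queue 4m
    timeout connect 100s
    timeout client 10m
    timeout server 10m
```

Restart the haproxy service for the change to take effect.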
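Haproxy time values take unit suffixes (us, ms, s, m, h, d) and default to milliseconds when no unit is given, while most OpenStack timeouts are plain seconds. When comparing the two, a small normalizing helper such as the sketch below can help. The example values are the recommended ones above.

```python
import re

# Haproxy time units, in seconds; a bare number means milliseconds.
_UNITS = {"us": 1e-6, "ms": 1e-3, "s": 1, "m": 60, "h": 3600, "d": 86400}


def haproxy_seconds(value):
    """Convert a Haproxy time value such as '100s' or '4m' to seconds."""
    match = re.fullmatch(r"(\d+)(us|ms|s|m|h|d)?", value.strip())
    if not match:
        raise ValueError("not a Haproxy time value: %r" % value)
    number, unit = match.groups()
    return int(number) * _UNITS[unit or "ms"]


if __name__ == "__main__":
    recommended = {"http-request": "100s", "queue": "4m", "connect": "100s",
                   "client": "10m", "server": "10m"}
    for name, value in recommended.items():
        print("timeout %-12s %6s = %g s" % (name, value, haproxy_seconds(value)))
```

Normalizing the units makes it easier to line the Haproxy timeouts up against component-side values such as rpc_response_timeout.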

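- Maximum connections

Haproxy can set maxconn at three levels:

- a global maxconn, for the maximum number of concurrent connections Haproxy handles;
- a maxconn on a backend server, for that server's maximum;
- a maxconn on a frontend, for the maximum on that listening port.

The system's ulimit -n must be larger than maxconn.

- Recommended configuration

The global maxconn default is 4000. During testing, the Haproxy stats page showed that the global connection limit was insufficient, so it was increased to 40000.

Edit the Haproxy configuration file haproxy.cfg:

```
global
    maxconn 40000
```

Restart the haproxy service for the change to take effect.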
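A rough check of the file-descriptor limit against maxconn is sketched below using Python's resource module, which is Unix only. It assumes about two descriptors per proxied connection (client side and server side) plus a fixed headroom. Both numbers are assumptions for illustration, not Haproxy's exact formula. Run it in the same environment whose limit you want to check, for example inside the Haproxy container.

```python
import resource


def required_fds(maxconn, per_connection=2, headroom=100):
    """Rough descriptor estimate for a given global maxconn (assumed formula)."""
    return maxconn * per_connection + headroom


def check_nofile(maxconn):
    """Compare the current soft RLIMIT_NOFILE with the rough estimate."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    need = required_fds(maxconn)
    if soft != resource.RLIM_INFINITY and soft < need:
        return "too low: ulimit -n is %d, about %d needed" % (soft, need)
    return "ok"


if __name__ == "__main__":
    print(check_nofile(40000))
```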

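- Number of processes

nbproc sets the number of Haproxy load-balancing processes. The Haproxy version used with OpenStack Stein here is 1.5.18. This parameter was removed in Haproxy 2.5, where the number of threads is set with nbthread instead.

- Recommended configuration

Haproxy is heavily loaded, so increasing this parameter is recommended.

Edit the Haproxy configuration file haproxy.cfg:

```
global
    nbproc 4
```

Restart the haproxy service for the change to take effect.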

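- Weight

The backend server parameter weight sets a server's weight, in the range 0-256. The larger the weight, the more requests the server receives. A server with weight 0 receives no new connections. The default weight of every server is 1.

- Recommended configuration

When the hosts behind a backend are unevenly loaded, lower the weight of the servers on the busier hosts to balance the load across hosts.

Take a small cluster with 3 controller nodes as an example. During testing, overall CPU usage on controller03 exceeded 95%, while the other two controller nodes were at about 70%, and keystone's CPU usage was high on all controller nodes. Lowering the weight of the keystone server on controller03 reduces the CPU pressure on controller03.

Edit the Haproxy configuration file keystone.cfg:

```
listen keystone_external
    mode http
    http-request del-header X-Forwarded-Proto
    option httplog
    option forwardfor
    http-request set-header X-Forwarded-Proto https if { ssl_fc }
    bind haproxy-ip-addr:5000 maxconn 5000
    server controller01 server-ip-addr:5000 check inter 2000 rise 2 fall 5 maxconn 3000 weight 10
    server controller02 server-ip-addr:5000 check inter 2000 rise 2 fall 5 maxconn 3000 weight 10
    server controller03 server-ip-addr:5000 check inter 2000 rise 2 fall 5 maxconn 3000 weight 9
```

Restart the haproxy service for the change to take effect.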
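The effect of the weights on the request distribution can be estimated as each server's weight divided by the sum of the weights. The sketch assumes a weight-proportional algorithm such as the default roundrobin and a large number of requests; real shares fluctuate with the request mix. With weights 10/10/9, controller03 receives about 31% of requests instead of 33%.

```python
def request_shares(weights):
    """Expected long-run fraction of requests per server for weighted balancing."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("all servers have weight 0")
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    shares = request_shares({"controller01": 10, "controller02": 10, "controller03": 9})
    for name, share in shares.items():
        print("%s: %.1f%%" % (name, 100 * share))
```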

~~~~~~~~~~~~~~~~~~

Appendix

A1.1

|

diff -ruN nova-bak/api/openstack/compute/migrate_server.py nova/api/openstack/compute/migrate_server.py --- nova-bak/api/openstack/compute/migrate_server.py 2021-09-10 11:20:15.774990677 +0800 +++ nova/api/openstack/compute/migrate_server.py 2021-09-10 11:23:22.239098421 +0800 @@ -157,7 +157,9 @@ 'conductor during pre-live-migration checks ' ''%(ex)s'', {'ex': ex}) else: - raise exc.HTTPBadRequest(explanation=ex.format_message()) + raise exc.HTTPBadRequest(explanation=ex.format_message()) + except exception.OperationNotSupportedForSEV as e: + raise exc.HTTPConflict(explanation=e.format_message()) except exception.InstanceIsLocked as e: raise exc.HTTPConflict(explanation=e.format_message()) except exception.ComputeHostNotFound as e: diff -ruN nova-bak/api/openstack/compute/suspend_server.py nova/api/openstack/compute/suspend_server.py --- nova-bak/api/openstack/compute/suspend_server.py 2021-09-10 11:25:03.847439106 +0800 +++ nova/api/openstack/compute/suspend_server.py 2021-09-10 11:27:09.958950964 +0800 @@ -40,7 +40,8 @@ self.compute_api.suspend(context, server) except exception.InstanceUnknownCell as e: raise exc.HTTPNotFound(explanation=e.format_message()) - except exception.InstanceIsLocked as e: + except (exception.OperationNotSupportedForSEV, + exception.InstanceIsLocked) as e: raise exc.HTTPConflict(explanation=e.format_message()) except exception.InstanceInvalidState as state_error: common.raise_http_conflict_for_instance_invalid_state(state_error, diff -ruN nova-bak/compute/api.py nova/compute/api.py --- nova-bak/compute/api.py 2021-09-10 11:31:55.278077457 +0800 +++ nova/compute/api.py 2021-09-10 15:32:28.131175652 +0800 @@ -215,6 +215,23 @@ return fn(self, context, instance, *args, **kwargs) return _wrapped

+def reject_sev_instances(operation): + '''Decorator. Raise OperationNotSupportedForSEV if instance has SEV + enabled. + ''' + + def outer(f): + @six.wraps(f) + def inner(self, context, instance, *args, **kw): + if hardware.get_mem_encryption_constraint(instance.flavor, + instance.image_meta): + raise exception.OperationNotSupportedForSEV( + instance_uuid=instance.uuid, + operation=operation) + return f(self, context, instance, *args, **kw) + return inner + return outer +

def _diff_dict(orig, new): '''Return a dict describing how to change orig to new. The keys @@ -690,6 +707,9 @@ ''' image_meta = _get_image_meta_obj(image)

+ API._validate_flavor_image_mem_encryption(instance_type, image_meta) + + # Only validate values of flavor/image so the return results of # following 'get' functions are not used. hardware.get_number_of_serial_ports(instance_type, image_meta) @@ -701,6 +721,19 @@ if validate_pci: pci_request.get_pci_requests_from_flavor(instance_type)

+ @staticmethod + def _validate_flavor_image_mem_encryption(instance_type, image): + '''Validate that the flavor and image don't make contradictory + requests regarding memory encryption. + :param instance_type: Flavor object + :param image: an ImageMeta object + :raises: nova.exception.FlavorImageConflict + ''' + # This library function will raise the exception for us if + # necessary; if not, we can ignore the result returned. + hardware.get_mem_encryption_constraint(instance_type, image) + + def _get_image_defined_bdms(self, instance_type, image_meta, root_device_name): image_properties = image_meta.get('properties', {}) @@ -3915,6 +3948,7 @@ return self.compute_rpcapi.get_instance_diagnostics(context, instance=instance)

+ @reject_sev_instances(instance_actions.SUSPEND) @check_instance_lock @check_instance_cell @check_instance_state(vm_state=[vm_states.ACTIVE]) @@ -4699,6 +4733,7 @@ diff=diff) return _metadata

+ @reject_sev_instances(instance_actions.SUSPEND) @check_instance_lock @check_instance_cell @check_instance_state(vm_state=[vm_states.ACTIVE, vm_states.PAUSED]) diff -ruN nova-bak/exception.py nova/exception.py --- nova-bak/exception.py 2021-09-10 11:35:25.491284738 +0800 +++ nova/exception.py 2021-09-10 11:36:09.799787563 +0800 @@ -536,6 +536,10 @@ msg_fmt = _('Unable to migrate instance (%(instance_id)s) ' 'to current host (%(host)s).')

+class OperationNotSupportedForSEV(NovaException): + msg_fmt = _('Operation '%(operation)s' not supported for SEV-enabled ' + 'instance (%(instance_uuid)s).') + code = 409

class InvalidHypervisorType(Invalid): msg_fmt = _('The supplied hypervisor type of is invalid.') diff -ruN nova-bak/objects/image_meta.py nova/objects/image_meta.py --- nova-bak/objects/image_meta.py 2021-09-10 15:16:30.530628464 +0800 +++ nova/objects/image_meta.py 2021-09-10 15:19:26.999151245 +0800 @@ -177,6 +177,9 @@ super(ImageMetaProps, self).obj_make_compatible(primitive, target_version) target_version = versionutils.convert_version_to_tuple(target_version) + + if target_version < (1, 24): + primitive.pop('hw_mem_encryption', None) if target_version < (1, 21): primitive.pop('hw_time_hpet', None) if target_version < (1, 20): @@ -298,6 +301,11 @@ # is not practical to enumerate them all. So we use a free # form string 'hw_machine_type': fields.StringField(), + + # boolean indicating that the guest needs to be booted with + # encrypted memory + 'hw_mem_encryption': fields.FlexibleBooleanField(), +

# One of the magic strings 'small', 'any', 'large' # or an explicit page size in KB (eg 4, 2048, ...) diff -ruN nova-bak/scheduler/utils.py nova/scheduler/utils.py --- nova-bak/scheduler/utils.py 2021-09-10 15:19:58.172561042 +0800 +++ nova/scheduler/utils.py 2021-09-10 15:35:05.630393147 +0800 @@ -35,7 +35,7 @@ from nova.objects import instance as obj_instance from nova import rpc from nova.scheduler.filters import utils as filters_utils - +import nova.virt.hardware as hw

LOG = logging.getLogger(__name__)

@@ -61,6 +61,27 @@ # Default to the configured limit but _limit can be # set to None to indicate 'no limit'. self._limit = CONF.scheduler.max_placement_results + image = (request_spec.image if 'image' in request_spec + else objects.ImageMeta(properties=objects.ImageMetaProps())) + self._translate_memory_encryption(request_spec.flavor, image) + + def _translate_memory_encryption(self, flavor, image): + '''When the hw:mem_encryption extra spec or the hw_mem_encryption + image property are requested, translate into a request for + resources:MEM_ENCRYPTION_CONTEXT=1 which requires a slot on a + host which can support encryption of the guest memory. + ''' + # NOTE(aspiers): In theory this could raise FlavorImageConflict, + # but we already check it in the API layer, so that should never + # happen. + if not hw.get_mem_encryption_constraint(flavor, image): + # No memory encryption required, so no further action required. + return + + self._add_resource(None, orc.MEM_ENCRYPTION_CONTEXT, 1) + LOG.debug('Added %s=1 to requested resources', + orc.MEM_ENCRYPTION_CONTEXT) +

def __str__(self): return ', '.join(sorted( diff -ruN nova-bak/virt/hardware.py nova/virt/hardware.py --- nova-bak/virt/hardware.py 2022-02-23 10:45:42.320988102 +0800 +++ nova/virt/hardware.py 2021-09-10 14:05:25.145572630 +0800 @@ -1140,6 +1140,67 @@

return flavor_policy, image_policy

+def get_mem_encryption_constraint(flavor, image_meta, machine_type=None): + '''Return a boolean indicating whether encryption of guest memory was + requested, either via the hw:mem_encryption extra spec or the + hw_mem_encryption image property (or both). + Also watch out for contradictory requests between the flavor and + image regarding memory encryption, and raise an exception where + encountered. These conflicts can arise in two different ways: + 1) the flavor requests memory encryption but the image + explicitly requests *not* to have memory encryption, or + vice-versa + 2) the flavor and/or image request memory encryption, but the + image is missing hw_firmware_type=uefi + 3) the flavor and/or image request memory encryption, but the + machine type is set to a value which does not contain 'q35' + This can be called from the libvirt driver on the compute node, in + which case the driver should pass the result of + nova.virt.libvirt.utils.get_machine_type() as the machine_type + parameter, or from the API layer, in which case get_machine_type() + cannot be called since it relies on being run from the compute + node in order to retrieve CONF.libvirt.hw_machine_type. 
+ :param instance_type: Flavor object + :param image: an ImageMeta object + :param machine_type: a string representing the machine type (optional) + :raises: nova.exception.FlavorImageConflict + :raises: nova.exception.InvalidMachineType + :returns: boolean indicating whether encryption of guest memory + was requested + ''' + + flavor_mem_enc_str, image_mem_enc = _get_flavor_image_meta( + 'mem_encryption', flavor, image_meta) + + flavor_mem_enc = None + if flavor_mem_enc_str is not None: + flavor_mem_enc = strutils.bool_from_string(flavor_mem_enc_str) + + # Image property is a FlexibleBooleanField, so coercion to a + # boolean is handled automatically + + if not flavor_mem_enc and not image_mem_enc: + return False + + _check_for_mem_encryption_requirement_conflicts( + flavor_mem_enc_str, flavor_mem_enc, image_mem_enc, flavor, image_meta) + + # If we get this far, either the extra spec or image property explicitly + # specified a requirement regarding memory encryption, and if both did, + # they are asking for the same thing. + requesters = [] + if flavor_mem_enc: + requesters.append('hw:mem_encryption extra spec in %s flavor' % + flavor.name) + if image_mem_enc: + requesters.append('hw_mem_encryption property of image %s' % + image_meta.name) + + _check_mem_encryption_uses_uefi_image(requesters, image_meta) + _check_mem_encryption_machine_type(image_meta, machine_type) + + LOG.debug('Memory encryption requested by %s', ' and '.join(requesters)) + return True

def _get_numa_pagesize_constraint(flavor, image_meta): '''Return the requested memory page size |

A1.2

|

diff -ruN nova-bak/compute/manager.py nova/compute/manager.py --- nova-bak/compute/manager.py 2021-07-07 14:40:15.570807168 +0800 +++ nova/compute/manager.py 2021-10-18 19:02:37.931655551 +0800 @@ -7013,7 +7013,8 @@ migrate_data)

# Detaching volumes. - connector = self.driver.get_volume_connector(instance) + connector = None + #connector = self.driver.get_volume_connector(instance) for bdm in source_bdms: if bdm.is_volume: # Detaching volumes is a call to an external API that can fail. @@ -7033,6 +7034,8 @@ # remove the volume connection without detaching from # hypervisor because the instance is not running # anymore on the current host + if connector is None: + connector = self.driver.get_volume_connector(instance) self.volume_api.terminate_connection(ctxt, bdm.volume_id, connector) @@ -7056,8 +7059,10 @@

# Releasing vlan. # (not necessary in current implementation?) - - network_info = self.network_api.get_instance_nw_info(ctxt, instance) + + #changed by Fiona + #network_info = self.network_api.get_instance_nw_info(ctxt, instance) + network_info = instance.get_network_info()

self._notify_about_instance_usage(ctxt, instance, 'live_migration._post.start', |

A1.3

|

diff -ruN neutron-bak/agent/l3/router_info.py neutron-iproute/agent/l3/router_info.py --- neutron-bak/agent/l3/router_info.py 2020-12-14 18:00:23.683687327 +0800 +++ neutron-iproute/agent/l3/router_info.py 2022-02-23 15:18:15.650669589 +0800 @@ -748,8 +748,10 @@ for ip_version in (lib_constants.IP_VERSION_4, lib_constants.IP_VERSION_6): gateway = device.route.get_gateway(ip_version=ip_version) - if gateway and gateway.get('gateway'): - current_gateways.add(gateway.get('gateway')) +# if gateway and gateway.get('gateway'): +# current_gateways.add(gateway.get('gateway')) + if gateway and gateway.get('via'): + current_gateways.add(gateway.get('via')) for ip in current_gateways - set(gateway_ips): device.route.delete_gateway(ip) for ip in gateway_ips: diff -ruN neutron-bak/agent/linux/ip_lib.py neutron-iproute/agent/linux/ip_lib.py --- neutron-bak/agent/linux/ip_lib.py 2020-12-14 18:03:47.951878754 +0800 +++ neutron-iproute/agent/linux/ip_lib.py 2022-02-23 15:19:03.981457532 +0800 @@ -48,6 +48,8 @@ 'main': 254, 'local': 255}

+IP_RULE_TABLES_NAMES = {v: k for k, v in IP_RULE_TABLES.items()} + # Rule indexes: pyroute2.netlink.rtnl # Rule names: https://www.systutorials.com/docs/linux/man/8-ip-rule/ # NOTE(ralonsoh): 'masquerade' type is printed as 'nat' in 'ip rule' command @@ -592,14 +594,18 @@ def _dev_args(self): return ['dev', self.name] if self.name else []

- def add_gateway(self, gateway, metric=None, table=None): - ip_version = common_utils.get_ip_version(gateway) - args = ['replace', 'default', 'via', gateway] - if metric: - args += ['metric', metric] - args += self._dev_args() - args += self._table_args(table) - self._as_root([ip_version], tuple(args)) +# def add_gateway(self, gateway, metric=None, table=None): +# ip_version = common_utils.get_ip_version(gateway) +# args = ['replace', 'default', 'via', gateway] +# if metric: +# args += ['metric', metric] +# args += self._dev_args() +# args += self._table_args(table) +# self._as_root([ip_version], tuple(args)) + + def add_gateway(self, gateway, metric=None, table=None, scope='global'): + self.add_route(None, via=gateway, table=table, metric=metric, + scope=scope)

     def _run_as_root_detect_device_not_found(self, options, args):
         try:
@@ -618,41 +624,16 @@
         args += self._table_args(table)
         self._run_as_root_detect_device_not_found([ip_version], args)

-    def _parse_routes(self, ip_version, output, **kwargs):
-        for line in output.splitlines():
-            parts = line.split()
-
-            # Format of line is: '
-            route = {k: v for k, v in zip(parts[1::2], parts[2::2])}
-            route['cidr'] = parts[0]
-            # Avoids having to explicitly pass around the IP version
-            if route['cidr'] == 'default':
-                route['cidr'] = constants.IP_ANY[ip_version]
-
-            # ip route drops things like scope and dev from the output if it
-            # was specified as a filter. This allows us to add them back.
-            if self.name:
-                route['dev'] = self.name
-            if self._table:
-                route['table'] = self._table
-            # Callers add any filters they use as kwargs
-            route.update(kwargs)
-
-            yield route
-
-    def list_routes(self, ip_version, **kwargs):
-        args = ['list']
-        args += self._dev_args()
-        args += self._table_args()
-        for k, v in kwargs.items():
-            args += [k, v]
-
-        output = self._run([ip_version], tuple(args))
-        return [r for r in self._parse_routes(ip_version, output, **kwargs)]
+    def list_routes(self, ip_version, scope=None, via=None, table=None,
+                    **kwargs):
+        table = table or self._table
+        return list_ip_routes(self._parent.namespace, ip_version, scope=scope,
+                              via=via, table=table, device=self.name, **kwargs)

     def list_onlink_routes(self, ip_version):
         routes = self.list_routes(ip_version, scope='link')
-        return [r for r in routes if 'src' not in r]
+#        return [r for r in routes if 'src' not in r]
+        return [r for r in routes if not r['source_prefix']]

     def add_onlink_route(self, cidr):
         self.add_route(cidr, scope='link')
@@ -660,34 +641,12 @@
     def delete_onlink_route(self, cidr):
         self.delete_route(cidr, scope='link')

-    def get_gateway(self, scope=None, filters=None, ip_version=None):
-        options = [ip_version] if ip_version else []
-
-        args = ['list']
-        args += self._dev_args()
-        args += self._table_args()
-        if filters:
-            args += filters
-
-        retval = None
-
-        if scope:
-            args += ['scope', scope]
-
-        route_list_lines = self._run(options, tuple(args)).split('\n')
-        default_route_line = next((x.strip() for x in
-                                   route_list_lines if
-                                   x.strip().startswith('default')), None)
-        if default_route_line:
-            retval = dict()
-            gateway = DEFAULT_GW_PATTERN.search(default_route_line)
-            if gateway:
-                retval.update(gateway=gateway.group(1))
-            metric = METRIC_PATTERN.search(default_route_line)
-            if metric:
-                retval.update(metric=int(metric.group(1)))
-
-        return retval
+    def get_gateway(self, scope=None, table=None,
+                    ip_version=constants.IP_VERSION_4):
+        routes = self.list_routes(ip_version, scope=scope, table=table)
+        for route in routes:
+            if route['via'] and route['cidr'] in constants.IP_ANY.values():
+                return route

     def flush(self, ip_version, table=None, **kwargs):
         args = ['flush']
@@ -696,16 +655,11 @@
             args += [k, v]
         self._as_root([ip_version], tuple(args))

-    def add_route(self, cidr, via=None, table=None, **kwargs):
-        ip_version = common_utils.get_ip_version(cidr)
-        args = ['replace', cidr]
-        if via:
-            args += ['via', via]
-        args += self._dev_args()
-        args += self._table_args(table)
-        for k, v in kwargs.items():
-            args += [k, v]
-        self._run_as_root_detect_device_not_found([ip_version], args)
+    def add_route(self, cidr, via=None, table=None, metric=None, scope=None,
+                  **kwargs):
+        table = table or self._table
+        add_ip_route(self._parent.namespace, cidr, device=self.name, via=via,
+                     table=table, metric=metric, scope=scope, **kwargs)

     def delete_route(self, cidr, via=None, table=None, **kwargs):
         ip_version = common_utils.get_ip_version(cidr)
@@ -1455,3 +1409,53 @@
                 retval[device['vxlan_link_index']]['name'])

     return list(retval.values())
+
+def add_ip_route(namespace, cidr, device=None, via=None, table=None,
+                 metric=None, scope=None, **kwargs):
+    '''Add an IP route'''
+    if table:
+        table = IP_RULE_TABLES.get(table, table)
+    ip_version = common_utils.get_ip_version(cidr or via)
+    privileged.add_ip_route(namespace, cidr, ip_version,
+                            device=device, via=via, table=table,
+                            metric=metric, scope=scope, **kwargs)
+
+
+def list_ip_routes(namespace, ip_version, scope=None, via=None, table=None,
+                   device=None, **kwargs):
+    '''List IP routes'''
+    def get_device(index, devices):
+        for device in (d for d in devices if d['index'] == index):
+            return get_attr(device, 'IFLA_IFNAME')
+
+    table = table if table else 'main'
+    table = IP_RULE_TABLES.get(table, table)
+    routes = privileged.list_ip_routes(namespace, ip_version, device=device,
+                                       table=table, **kwargs)
+    devices = privileged.get_link_devices(namespace)
+    ret = []
+    for route in routes:
+        cidr = get_attr(route, 'RTA_DST')
+        if cidr:
+            cidr = '%s/%s' % (cidr, route['dst_len'])
+        else:
+            cidr = constants.IP_ANY[ip_version]
+        table = int(get_attr(route, 'RTA_TABLE'))
+        value = {
+            'table': IP_RULE_TABLES_NAMES.get(table, table),
+            'source_prefix': get_attr(route, 'RTA_PREFSRC'),
+            'cidr': cidr,
+            'scope': IP_ADDRESS_SCOPE[int(route['scope'])],
+            'device': get_device(int(get_attr(route, 'RTA_OIF')), devices),
+            'via': get_attr(route, 'RTA_GATEWAY'),
+            'priority': get_attr(route, 'RTA_PRIORITY'),
+        }
+
+        ret.append(value)
+
+    if scope:
+        ret = [route for route in ret if route['scope'] == scope]
+    if via:
+        ret = [route for route in ret if route['via'] == via]
+
+    return ret
diff -ruN neutron-bak/cmd/sanity/checks.py neutron-iproute/cmd/sanity/checks.py
--- neutron-bak/cmd/sanity/checks.py	2022-02-23 11:33:16.934132708 +0800
+++ neutron-iproute/cmd/sanity/checks.py	2022-02-23 15:20:10.562018672 +0800
@@ -36,6 +36,7 @@
 from neutron.common import utils as common_utils
 from neutron.plugins.ml2.drivers.openvswitch.agent.common \
     import constants as ovs_const
+from neutron.privileged.agent.linux import dhcp as priv_dhcp

 LOG = logging.getLogger(__name__)

@@ -230,8 +231,8 @@

 def dhcp_release6_supported():
-    return runtime_checks.dhcp_release6_supported()
-
+#    return runtime_checks.dhcp_release6_supported()
+    return priv_dhcp.dhcp_release6_supported()

 def bridge_firewalling_enabled():
     for proto in ('arp', 'ip', 'ip6'):
@@ -363,7 +364,8 @@

         default_gw = gw_dev.route.get_gateway(ip_version=6)
         if default_gw:
-            default_gw = default_gw['gateway']
+#            default_gw = default_gw['gateway']
+            default_gw = default_gw['via']

     return expected_default_gw == default_gw

diff -ruN neutron-bak/privileged/agent/linux/ip_lib.py neutron-iproute/privileged/agent/linux/ip_lib.py
--- neutron-bak/privileged/agent/linux/ip_lib.py	2020-12-14 18:26:08.339307939 +0800
+++ neutron-iproute/privileged/agent/linux/ip_lib.py	2022-02-23 15:20:39.477439105 +0800
@@ -634,3 +634,50 @@
         if e.errno == errno.ENOENT:
             raise NetworkNamespaceNotFound(netns_name=namespace)
         raise
+
+@privileged.default.entrypoint
+@lockutils.synchronized('privileged-ip-lib')
+def add_ip_route(namespace, cidr, ip_version, device=None, via=None,
+                 table=None, metric=None, scope=None, **kwargs):
+    '''Add an IP route'''
+    try:
+        with get_iproute(namespace) as ip:
+            family = _IP_VERSION_FAMILY_MAP[ip_version]
+            if not scope:
+                scope = 'global' if via else 'link'
+            scope = _get_scope_name(scope)
+            if cidr:
+                kwargs['dst'] = cidr
+            if via:
+                kwargs['gateway'] = via
+            if table:
+                kwargs['table'] = int(table)
+            if device:
+                kwargs['oif'] = get_link_id(device, namespace)
+            if metric:
+                kwargs['priority'] = int(metric)
+            ip.route('replace', family=family, scope=scope, proto='static',
+                     **kwargs)
+    except OSError as e:
+        if e.errno == errno.ENOENT:
+            raise NetworkNamespaceNotFound(netns_name=namespace)
+        raise
+
+
+@privileged.default.entrypoint
+@lockutils.synchronized('privileged-ip-lib')
+def list_ip_routes(namespace, ip_version, device=None, table=None, **kwargs):
+    '''List IP routes'''
+    try:
+        with get_iproute(namespace) as ip:
+            family = _IP_VERSION_FAMILY_MAP[ip_version]
+            if table:
+                kwargs['table'] = table
+            if device:
+                kwargs['oif'] = get_link_id(device, namespace)
+            return make_serializable(ip.route('show', family=family, **kwargs))
+    except OSError as e:
+        if e.errno == errno.ENOENT:
+            raise NetworkNamespaceNotFound(netns_name=namespace)
+        raise
+
|

A1.4

|

diff -ruN neutron-bak/agent/linux/dhcp.py neutron-dhcprelease/agent/linux/dhcp.py
--- neutron-bak/agent/linux/dhcp.py	2020-12-15 09:59:29.966957908 +0800
+++ neutron-dhcprelease/agent/linux/dhcp.py	2022-02-23 15:10:14.169101010 +0800
@@ -25,6 +25,7 @@
 from neutron_lib import constants
 from neutron_lib import exceptions
 from neutron_lib.utils import file as file_utils
+from oslo_concurrency import processutils
 from oslo_log import log as logging
 from oslo_utils import excutils
 from oslo_utils import fileutils
@@ -41,6 +42,7 @@
 from neutron.common import ipv6_utils
 from neutron.common import utils as common_utils
 from neutron.ipam import utils as ipam_utils
+from neutron.privileged.agent.linux import dhcp as priv_dhcp

LOG = logging.getLogger(__name__)

@@ -476,7 +478,8 @@

     def _is_dhcp_release6_supported(self):
         if self._IS_DHCP_RELEASE6_SUPPORTED is None:
-            self._IS_DHCP_RELEASE6_SUPPORTED = checks.dhcp_release6_supported()
+            self._IS_DHCP_RELEASE6_SUPPORTED = (
+                priv_dhcp.dhcp_release6_supported())
             if not self._IS_DHCP_RELEASE6_SUPPORTED:
                 LOG.warning('dhcp_release6 is not present on this system, '
                             'will not call it again.')
@@ -485,24 +488,28 @@
     def _release_lease(self, mac_address, ip, ip_version, client_id=None,
                        server_id=None, iaid=None):
         '''Release a DHCP lease.'''
-        if ip_version == constants.IP_VERSION_6:
-            if not self._is_dhcp_release6_supported():
-                return
-            cmd = ['dhcp_release6', '--iface', self.interface_name,
-                   '--ip', ip, '--client-id', client_id,
-                   '--server-id', server_id, '--iaid', iaid]
-        else:
-            cmd = ['dhcp_release', self.interface_name, ip, mac_address]
-            if client_id:
-                cmd.append(client_id)
-        ip_wrapper = ip_lib.IPWrapper(namespace=self.network.namespace)
         try:
-            ip_wrapper.netns.execute(cmd, run_as_root=True)
-        except RuntimeError as e:
+            if ip_version == constants.IP_VERSION_6:
+                if not self._is_dhcp_release6_supported():
+                    return
+
+                params = {'interface_name': self.interface_name,
+                          'ip_address': ip, 'client_id': client_id,
+                          'server_id': server_id, 'iaid': iaid,
+                          'namespace': self.network.namespace}
+                priv_dhcp.dhcp_release6(**params)
+            else:
+                params = {'interface_name': self.interface_name,
+                          'ip_address': ip, 'mac_address': mac_address,
+                          'client_id': client_id,
+                          'namespace': self.network.namespace}
+#                LOG.info('Rock_DEBUG: DHCP release construct params %(params)s.', {'params': params})
+                priv_dhcp.dhcp_release(**params)
+        except (processutils.ProcessExecutionError, OSError) as e:
             # when failed to release single lease there's
             # no need to propagate error further
-            LOG.warning('DHCP release failed for %(cmd)s. '
-                        'Reason: %(e)s', {'cmd': cmd, 'e': e})
+            LOG.warning('DHCP release failed for params %(params)s. '
+                        'Reason: %(e)s', {'params': params, 'e': e})

     def _output_config_files(self):
         self._output_hosts_file()
diff -ruN neutron-bak/cmd/sanity/checks.py neutron-dhcprelease/cmd/sanity/checks.py
--- neutron-bak/cmd/sanity/checks.py	2022-02-23 11:33:16.934132708 +0800
+++ neutron-dhcprelease/cmd/sanity/checks.py	2022-02-23 15:11:07.536446402 +0800
@@ -36,6 +36,7 @@
 from neutron.common import utils as common_utils
 from neutron.plugins.ml2.drivers.openvswitch.agent.common \
     import constants as ovs_const
+from neutron.privileged.agent.linux import dhcp as priv_dhcp

 LOG = logging.getLogger(__name__)

@@ -230,8 +231,8 @@

 def dhcp_release6_supported():
-    return runtime_checks.dhcp_release6_supported()
-
+#    return runtime_checks.dhcp_release6_supported()
+    return priv_dhcp.dhcp_release6_supported()

 def bridge_firewalling_enabled():
     for proto in ('arp', 'ip', 'ip6'):
@@ -363,7 +364,8 @@

         default_gw = gw_dev.route.get_gateway(ip_version=6)
         if default_gw:
-            default_gw = default_gw['gateway']
+#            default_gw = default_gw['gateway']
+            default_gw = default_gw['via']

     return expected_default_gw == default_gw

diff -ruN neutron-bak/privileged/__init__.py neutron-dhcprelease/privileged/__init__.py
--- neutron-bak/privileged/__init__.py	2020-04-23 14:45:14.000000000 +0800
+++ neutron-dhcprelease/privileged/__init__.py	2022-02-23 15:10:29.209584186 +0800
@@ -27,3 +27,11 @@
                   caps.CAP_DAC_OVERRIDE,
                   caps.CAP_DAC_READ_SEARCH],
 )
+
+dhcp_release_cmd = priv_context.PrivContext(
+    __name__,
+    cfg_section='privsep_dhcp_release',
+    pypath=__name__ + '.dhcp_release_cmd',
+    capabilities=[caps.CAP_SYS_ADMIN,
+                  caps.CAP_NET_ADMIN]
+)
|

A1.5

|

diff -ruN neutron-bak/agent/linux/ipset_manager.py neutron/agent/linux/ipset_manager.py
--- neutron-bak/agent/linux/ipset_manager.py	2022-02-16 15:11:40.419016919 +0800
+++ neutron/agent/linux/ipset_manager.py	2022-02-16 15:17:02.328133786 +0800
@@ -146,7 +146,7 @@
             cmd_ns.extend(['ip', 'netns', 'exec', self.namespace])
         cmd_ns.extend(cmd)
         self.execute(cmd_ns, run_as_root=True, process_input=input,
-                     check_exit_code=fail_on_errors)
+                     check_exit_code=fail_on_errors, privsep_exec=True)

     def _get_new_set_ips(self, set_name, expected_ips):
         new_member_ips = (set(expected_ips) -
diff -ruN neutron-bak/agent/linux/iptables_manager.py neutron/agent/linux/iptables_manager.py
--- neutron-bak/agent/linux/iptables_manager.py	2022-02-16 15:05:53.853147520 +0800
+++ neutron/agent/linux/iptables_manager.py	2021-07-07 14:59:16.000000000 +0800
@@ -475,12 +475,15 @@
         args = ['iptables-save', '-t', table]
         if self.namespace:
             args = ['ip', 'netns', 'exec', self.namespace] + args
-        return self.execute(args, run_as_root=True).split('\n')
+        #return self.execute(args, run_as_root=True).split('\n')
+        return self.execute(args, run_as_root=True,
+                            privsep_exec=True).split('\n')

     def _get_version(self):
         # Output example is 'iptables v1.6.2'
         args = ['iptables', '--version']
-        version = str(self.execute(args, run_as_root=True).split()[1][1:])
+        #version = str(self.execute(args, run_as_root=True).split()[1][1:])
+        version = str(self.execute(args, run_as_root=True, privsep_exec=True).split()[1][1:])
         LOG.debug('IPTables version installed: %s', version)
         return version

@@ -505,8 +508,10 @@
             args += ['-w', self.xlock_wait_time, '-W', XLOCK_WAIT_INTERVAL]
         try:
             kwargs = {} if lock else {'log_fail_as_error': False}
+            #self.execute(args, process_input='\n'.join(commands),
+            #             run_as_root=True, **kwargs)
             self.execute(args, process_input='\n'.join(commands),
-                         run_as_root=True, **kwargs)
+                         run_as_root=True, privsep_exec=True, **kwargs)
         except RuntimeError as error:
             return error

@@ -568,7 +573,8 @@
             if self.namespace:
                 args = ['ip', 'netns', 'exec', self.namespace] + args
             try:
-                save_output = self.execute(args, run_as_root=True)
+                #save_output = self.execute(args, run_as_root=True)
+                save_output = self.execute(args, run_as_root=True, privsep_exec=True)
             except RuntimeError:
                 # We could be racing with a cron job deleting namespaces.
                 # It is useless to try to apply iptables rules over and
@@ -769,7 +775,8 @@
                     args.append('-Z')
                 if self.namespace:
                     args = ['ip', 'netns', 'exec', self.namespace] + args
-                current_table = self.execute(args, run_as_root=True)
+                #current_table = self.execute(args, run_as_root=True)
+                current_table = self.execute(args, run_as_root=True, privsep_exec=True)
                 current_lines = current_table.split('\n')

                 for line in current_lines[2:]:
diff -ruN neutron-bak/agent/linux/utils.py neutron/agent/linux/utils.py
--- neutron-bak/agent/linux/utils.py	2022-02-16 15:06:03.133090388 +0800
+++ neutron/agent/linux/utils.py	2021-07-08 09:34:12.000000000 +0800
@@ -38,6 +38,7 @@
 from neutron.agent.linux import xenapi_root_helper
 from neutron.common import utils
 from neutron.conf.agent import common as config
+from neutron.privileged.agent.linux import utils as priv_utils
 from neutron import wsgi

@@ -85,13 +86,24 @@
     if run_as_root:
         cmd = shlex.split(config.get_root_helper(cfg.CONF)) + cmd
     LOG.debug('Running command: %s', cmd)
-    obj = utils.subprocess_popen(cmd, shell=False,
-                                 stdin=subprocess.PIPE,
-                                 stdout=subprocess.PIPE,
-                                 stderr=subprocess.PIPE)
+    #obj = utils.subprocess_popen(cmd, shell=False,
+    #                             stdin=subprocess.PIPE,
+    #                             stdout=subprocess.PIPE,
+    #                             stderr=subprocess.PIPE)
+    obj = subprocess.Popen(cmd, shell=False, stdin=subprocess.PIPE,
+                           stdout=subprocess.PIPE, stderr=subprocess.PIPE)

     return obj, cmd

+def _execute_process(cmd, _process_input, addl_env, run_as_root):
+    obj, cmd = create_process(cmd, run_as_root=run_as_root, addl_env=addl_env)
+    _stdout, _stderr = obj.communicate(_process_input)
+    returncode = obj.returncode
+    obj.stdin.close()
+    _stdout = helpers.safe_decode_utf8(_stdout)
+    _stderr = helpers.safe_decode_utf8(_stderr)
+    return _stdout, _stderr, returncode
+

 def execute_rootwrap_daemon(cmd, process_input, addl_env):
     cmd = list(map(str, addl_env_args(addl_env) + cmd))
@@ -103,31 +115,45 @@
     LOG.debug('Running command (rootwrap daemon): %s', cmd)
     client = RootwrapDaemonHelper.get_client()
     try:
-        return client.execute(cmd, process_input)
+        #return client.execute(cmd, process_input)
+        returncode, __stdout, _stderr = client.execute(cmd, process_input)
     except Exception:
         with excutils.save_and_reraise_exception():
             LOG.error('Rootwrap error running command: %s', cmd)
+    _stdout = helpers.safe_decode_utf8(__stdout)
+    _stderr = helpers.safe_decode_utf8(_stderr)
+    return _stdout, _stderr, returncode

 def execute(cmd, process_input=None, addl_env=None,
             check_exit_code=True, return_stderr=False, log_fail_as_error=True,
-            extra_ok_codes=None, run_as_root=False):
+            extra_ok_codes=None, run_as_root=False, privsep_exec=False):
     try:
         if process_input is not None:
             _process_input = encodeutils.to_utf8(process_input)
         else:
             _process_input = None
-        if run_as_root and cfg.CONF.AGENT.root_helper_daemon:
-            returncode, _stdout, _stderr = (
-                execute_rootwrap_daemon(cmd, process_input, addl_env))
+        #if run_as_root and cfg.CONF.AGENT.root_helper_daemon:
+        #    returncode, _stdout, _stderr = (
+        #        execute_rootwrap_daemon(cmd, process_input, addl_env))
+        #else:
+        #    obj, cmd = create_process(cmd, run_as_root=run_as_root,
+        #                              addl_env=addl_env)
+        #    _stdout, _stderr = obj.communicate(_process_input)
+        #    returncode = obj.returncode
+        #    obj.stdin.close()
+        #_stdout = helpers.safe_decode_utf8(_stdout)
+        #_stderr = helpers.safe_decode_utf8(_stderr)
+
+        if run_as_root and privsep_exec:
+            _stdout, _stderr, returncode = priv_utils.execute_process(
+                cmd, _process_input, addl_env)
+        elif run_as_root and cfg.CONF.AGENT.root_helper_daemon:
+            _stdout, _stderr, returncode = execute_rootwrap_daemon(
+                cmd, process_input, addl_env)
         else:
-            obj, cmd = create_process(cmd, run_as_root=run_as_root,
-                                      addl_env=addl_env)
-            _stdout, _stderr = obj.communicate(_process_input)
-            returncode = obj.returncode
-            obj.stdin.close()
-        _stdout = helpers.safe_decode_utf8(_stdout)
-        _stderr = helpers.safe_decode_utf8(_stderr)
+            _stdout, _stderr, returncode = _execute_process(
+                cmd, _process_input, addl_env, run_as_root)

         extra_ok_codes = extra_ok_codes or []
         if returncode and returncode not in extra_ok_codes:
diff -ruN neutron-bak/cmd/ipset_cleanup.py neutron/cmd/ipset_cleanup.py
--- neutron-bak/cmd/ipset_cleanup.py	2022-02-16 15:18:00.727786180 +0800
+++ neutron/cmd/ipset_cleanup.py	2021-07-07 15:00:03.000000000 +0800
@@ -38,7 +38,8 @@
 def remove_iptables_reference(ipset):
     # Remove any iptables reference to this IPset
     cmd = ['iptables-save'] if 'IPv4' in ipset else ['ip6tables-save']
-    iptables_save = utils.execute(cmd, run_as_root=True)
+    #iptables_save = utils.execute(cmd, run_as_root=True)
+    iptables_save = utils.execute(cmd, run_as_root=True, privsep_exec=True)

     if ipset in iptables_save:
         cmd = ['iptables'] if 'IPv4' in ipset else ['ip6tables']
@@ -50,7 +51,8 @@
                 params = rule.split()
                 params[0] = '-D'
                 try:
-                    utils.execute(cmd + params, run_as_root=True)
+                    #utils.execute(cmd + params, run_as_root=True)
+                    utils.execute(cmd + params, run_as_root=True, privsep_exec=True)
                 except Exception:
                     LOG.exception('Error, unable to remove iptables rule '
                                   'for IPset: %s', ipset)
@@ -65,7 +67,8 @@
     LOG.info('Destroying IPset: %s', ipset)
     cmd = ['ipset', 'destroy', ipset]
     try:
-        utils.execute(cmd, run_as_root=True)
+        #utils.execute(cmd, run_as_root=True)
+        utils.execute(cmd, run_as_root=True, privsep_exec=True)
     except Exception:
         LOG.exception('Error, unable to destroy IPset: %s', ipset)

@@ -75,7 +78,8 @@
     LOG.info('Destroying IPsets with prefix: %s', conf.prefix)

     cmd = ['ipset', '-L', '-n']
-    ipsets = utils.execute(cmd, run_as_root=True)
+    #ipsets = utils.execute(cmd, run_as_root=True)
+    ipsets = utils.execute(cmd, run_as_root=True, privsep_exec=True)
     for ipset in ipsets.split('\n'):
         if conf.allsets or ipset.startswith(conf.prefix):
             destroy_ipset(conf, ipset)
diff -ruN neutron-bak/privileged/agent/linux/utils.py neutron/privileged/agent/linux/utils.py
--- neutron-bak/privileged/agent/linux/utils.py	1970-01-01 08:00:00.000000000 +0800
+++ neutron/privileged/agent/linux/utils.py	2021-07-07 14:58:21.000000000 +0800
@@ -0,0 +1,82 @@
+# Copyright 2020 Red Hat, Inc.
+#
+#    Licensed under the Apache License, Version 2.0 (the 'License'); you may
+#    not use this file except in compliance with the License. You may obtain
+#    a copy of the License at
+#
+#         http://www.apache.org/licenses/LICENSE-2.0
+#
+#    Unless required by applicable law or agreed to in writing, software
+#    distributed under the License is distributed on an 'AS IS' BASIS, WITHOUT
+#    WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the
+#    License for the specific language governing permissions and limitations
+#    under the License.
+
+import os
+import re
+
+from eventlet.green import subprocess
+from neutron_lib.utils import helpers
+from oslo_concurrency import processutils
+from oslo_utils import fileutils
+
+from neutron import privileged
+
+
+NETSTAT_PIDS_REGEX = re.compile(r'.* (?P
+
+
+@privileged.default.entrypoint
+def find_listen_pids_namespace(namespace):
+    return _find_listen_pids_namespace(namespace)
+
+
+def _find_listen_pids_namespace(namespace):
+    '''Retrieve a list of pids of listening processes within the given netns
+    This method is implemented separately to allow unit testing.
+    '''
+    pids = set()
+    cmd = ['ip', 'netns', 'exec', namespace, 'netstat', '-nlp']
+    output = processutils.execute(*cmd)
+    for line in output[0].splitlines():
+        m = NETSTAT_PIDS_REGEX.match(line)
+        if m:
+            pids.add(m.group('pid'))
+    return list(pids)
+
+
+@privileged.default.entrypoint
+def delete_if_exists(path, remove=os.unlink):
+    fileutils.delete_if_exists(path, remove=remove)
+
+
+@privileged.default.entrypoint
+def execute_process(cmd, _process_input, addl_env):
+    obj, cmd = _create_process(cmd, addl_env=addl_env)
+    _stdout, _stderr = obj.communicate(_process_input)
+    returncode = obj.returncode
+    obj.stdin.close()
+    _stdout = helpers.safe_decode_utf8(_stdout)
+    _stderr = helpers.safe_decode_utf8(_stderr)
+    return _stdout, _stderr, returncode
+
+
+def _addl_env_args(addl_env):
+    '''Build arguments for adding additional environment vars with env'''
+
+    # NOTE (twilson) If using rootwrap, an EnvFilter should be set up for the
+    # command instead of a CommandFilter.
+    if addl_env is None:
+        return []
+    return ['env'] + ['%s=%s' % pair for pair in addl_env.items()]
+
+
+def _create_process(cmd, addl_env=None):
+    '''Create a process object for the given command.
+    The return value will be a tuple of the process object and the
+    list of command arguments used to create it.
+    '''
+    cmd = list(map(str, _addl_env_args(addl_env) + list(cmd)))
+    obj = subprocess.Popen(cmd, shell=False, stdin=subprocess.PIPE,
+                           stdout=subprocess.PIPE, stderr=subprocess.PIPE)
+    return obj, cmd
|
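After A1.5, `utils.execute()` picks one of three runners: privsep (`privsep_exec=True`), the rootwrap daemon, or a plain subprocess. Every runner now returns `(stdout, stderr, returncode)` in the same order. The sketch below reproduces that dispatch using only the standard library. The privsep and rootwrap runners are replaced by injected stand-in callables, and this `execute` and `run_local` are simplified, hypothetical versions rather than the neutron functions; the real code also checks `extra_ok_codes`, logging and stderr handling that are omitted here.

```python
import subprocess
import sys


def run_local(cmd, process_input=None):
    """Plain subprocess runner, like _execute_process() without a root helper."""
    proc = subprocess.Popen(cmd, shell=False, stdin=subprocess.PIPE,
                            stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    out, err = proc.communicate(process_input)
    return out.decode('utf-8'), err.decode('utf-8'), proc.returncode


def execute(cmd, process_input=None, run_as_root=False, privsep_exec=False,
            use_rootwrap_daemon=False, privsep_runner=None,
            rootwrap_runner=None):
    """Choose a runner in the same order as the patched utils.execute()."""
    if run_as_root and privsep_exec:
        runner = privsep_runner          # priv_utils.execute_process in neutron
    elif run_as_root and use_rootwrap_daemon:
        runner = rootwrap_runner         # execute_rootwrap_daemon in neutron
    else:
        runner = run_local               # _execute_process in neutron
    stdout, stderr, returncode = runner(cmd, process_input)
    if returncode:
        raise RuntimeError('exit code %d: %s' % (returncode, stderr))
    return stdout


print(execute([sys.executable, '-c', 'print(6 * 7)']).strip())  # 42

# With privsep_exec=True the command is handed to the privileged runner;
# a fake one is used here so the example needs no root privileges.
fake_privsep = lambda cmd, data: ('*filter\nCOMMIT\n', '', 0)
print(execute(['iptables-save'], run_as_root=True, privsep_exec=True,
              privsep_runner=fake_privsep).splitlines()[0])  # *filter
```

Routing iptables and ipset calls through the privsep daemon, as A1.5 does, avoids spawning `sudo neutron-rootwrap` for every command. That saving is the reason the patch threads `privsep_exec=True` through those call sites.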

B1.1

|

--- neutron-bak/plugins/ml2/drivers/openvswitch/agent/ovs_neutron_agent.py	2022-08-02 17:02:51.213224245 +0800
+++ neutron/plugins/ml2/drivers/openvswitch/agent/ovs_neutron_agent.py	2022-08-02 17:02:09.181883012 +0800
@@ -161,8 +161,8 @@
         self.enable_distributed_routing = agent_conf.enable_distributed_routing
         self.arp_responder_enabled = agent_conf.arp_responder and self.l2_pop

-        host = self.conf.host
-        self.agent_id = 'ovs-agent-%s' % host
+        self.host = self.conf.host
+        self.agent_id = 'ovs-agent-%s' % self.host

         self.enable_tunneling = bool(self.tunnel_types)

@@ -245,7 +245,7 @@
             self.phys_ofports,
             self.patch_int_ofport,
             self.patch_tun_ofport,
-            host,
+            self.host,
             self.enable_tunneling,
             self.enable_distributed_routing,
             self.arp_responder_enabled)
@@ -289,7 +289,7 @@
         # or which are used by specific extensions.
         self.agent_state = {
             'binary': 'neutron-openvswitch-agent',
-            'host': host,
+            'host': self.host,
             'topic': n_const.L2_AGENT_TOPIC,
             'configurations': {'bridge_mappings': self.bridge_mappings,
                                c_const.RP_BANDWIDTHS: self.rp_bandwidths,
@@ -1671,6 +1671,7 @@
         skipped_devices = []
         need_binding_devices = []
         binding_no_activated_devices = set()
+        migrating_devices = set()
         agent_restarted = self.iter_num == 0
         devices_details_list = (
             self.plugin_rpc.get_devices_details_list_and_failed_devices(
@@ -1696,6 +1697,12 @@
                 skipped_devices.append(device)
                 continue

+            migrating_to = details.get('migrating_to')
+            if migrating_to and migrating_to != self.host:
+                LOG.info('Port %(device)s is being migrated to host %(host)s.',
+                         {'device': device, 'host': migrating_to})
+                migrating_devices.add(device)
+
             if 'port_id' in details:
                 LOG.info('Port %(device)s updated. Details: %(details)s',
                          {'device': device, 'details': details})
@@ -1729,7 +1736,7 @@
                 if (port and port.ofport != -1):
                     self.port_dead(port)
         return (skipped_devices, binding_no_activated_devices,
-                need_binding_devices, failed_devices)
+                need_binding_devices, failed_devices, migrating_devices)

     def _update_port_network(self, port_id, network_id):
         self._clean_network_ports(port_id)
@@ -1821,10 +1828,12 @@
         need_binding_devices = []
         skipped_devices = set()
         binding_no_activated_devices = set()
+        migrating_devices = set()
         start = time.time()
         if devices_added_updated:
             (skipped_devices, binding_no_activated_devices,
-             need_binding_devices, failed_devices['added']) = (
+             need_binding_devices, failed_devices['added'],
+             migrating_devices) = (
                 self.treat_devices_added_or_updated(
                     devices_added_updated, provisioning_needed))
             LOG.debug('process_network_ports - iteration:%(iter_num)d - '
@@ -1847,7 +1856,7 @@
             # TODO(salv-orlando): Optimize avoiding applying filters
             # unnecessarily, (eg: when there are no IP address changes)
             added_ports = (port_info.get('added', set()) - skipped_devices -
-                           binding_no_activated_devices)
+                           binding_no_activated_devices - migrating_devices)
             self._add_port_tag_info(need_binding_devices)
             self.sg_agent.setup_port_filters(added_ports,
                                              port_info.get('updated', set()))
|
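B1.1 makes the OVS agent collect ports whose device details carry a `migrating_to` host other than its own. It then removes them from `added_ports` before security-group filters are set up. The sketch below isolates that bookkeeping with plain sets. The helper `split_migrating`, the port IDs and the host names are hypothetical.

```python
def split_migrating(devices_details, local_host):
    """Return the devices whose details name another host in 'migrating_to'.

    Mirrors the check added to treat_devices_added_or_updated(): only a
    non-empty 'migrating_to' that differs from this agent's host counts.
    """
    migrating = set()
    for details in devices_details:
        migrating_to = details.get('migrating_to')
        if migrating_to and migrating_to != local_host:
            migrating.add(details['device'])
    return migrating


details = [
    {'device': 'port-a', 'migrating_to': 'compute-02'},  # moving away
    {'device': 'port-b', 'migrating_to': None},          # not migrating
    {'device': 'port-c'},                                # key absent
]
added = {'port-a', 'port-b', 'port-c'}
skipped = {'port-c'}
binding_no_activated = set()
migrating = split_migrating(details, local_host='compute-01')

# Same expression shape as process_network_ports() after the patch.
added_ports = added - skipped - binding_no_activated - migrating
print(sorted(added_ports))  # ['port-b']
```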

B1.2

|

--- neutron-bak/conf/common.py	2022-08-02 17:07:18.239265163 +0800
+++ neutron/conf/common.py	2021-09-08 17:08:59.000000000 +0800
@@ -166,6 +166,24 @@
                help=_('Type of the nova endpoint to use. This endpoint will'
                       ' be looked up in the keystone catalog and should be'
                       ' one of public, internal or admin.')),
+    cfg.BoolOpt('live_migration_events', default=True,
+                help=_('When this option is enabled, during the live '
+                       'migration, the OVS agent will only send the '
+                       '"vif-plugged-event" when the destination host '
+                       'interface is bound. This option also disables any '
+                       'other agent (like DHCP) to send to Nova this event '
+                       'when the port is provisioned.'
+                       'This option can be enabled if Nova patch '
+                       'https://review.opendev.org/c/openstack/nova/+/767368 '
+                       'is in place.'
+                       'This option is temporary and will be removed in Y and '
+                       'the behavior will be "True".'),
+                deprecated_for_removal=True,
+                deprecated_reason=(
+                    'In Y the Nova patch '
+                    'https://review.opendev.org/c/openstack/nova/+/767368 '
+                    'will be in the code even when running a Nova server in '
+                    'X.')),
 ]
|

B1.3

|

--- neutron-bak/agent/rpc.py	2021-08-25 15:29:11.000000000 +0800
+++ neutron/agent/rpc.py	2021-09-15 16:34:09.000000000 +0800
@@ -25,8 +25,10 @@
 from neutron_lib import constants
 from neutron_lib.plugins import utils
 from neutron_lib import rpc as lib_rpc
+from oslo_config import cfg
 from oslo_log import log as logging
 import oslo_messaging
+from oslo_serialization import jsonutils
 from oslo_utils import uuidutils

 from neutron.agent import resource_cache
@@ -323,8 +325,10 @@
         binding = utils.get_port_binding_by_status_and_host(
             port_obj.bindings, constants.ACTIVE, raise_if_not_found=True,
             port_id=port_obj.id)
-        if (port_obj.device_owner.startswith(
-                constants.DEVICE_OWNER_COMPUTE_PREFIX) and
+        migrating_to = migrating_to_host(port_obj.bindings)
+        if (not (migrating_to and cfg.CONF.nova.live_migration_events) and
+                port_obj.device_owner.startswith(
+                    constants.DEVICE_OWNER_COMPUTE_PREFIX) and
                 binding[pb_ext.HOST] != host):
             LOG.debug('Device %s has no active binding in this host',
                       port_obj)
@@ -357,7 +361,8 @@
             'qos_policy_id': port_obj.qos_policy_id,
             'network_qos_policy_id': net_qos_policy_id,
             'profile': binding.profile,
-            'security_groups': list(port_obj.security_group_ids)
+            'security_groups': list(port_obj.security_group_ids),
+            'migrating_to': migrating_to,
         }
         LOG.debug('Returning: %s', entry)
         return entry
@@ -365,3 +370,40 @@
     def get_devices_details_list(self, context, devices, agent_id, host=None):
         return [self.get_device_details(context, device, agent_id, host)
                 for device in devices]
+
+# TODO(ralonsoh): move this method to neutron_lib.plugins.utils
+def migrating_to_host(bindings, host=None):
+    '''Return the host the port is being migrated.
+
+    If the host is passed, the port binding profile with the 'migrating_to',
+    that contains the host the port is being migrated, is compared to this
+    value. If no value is passed, this method will return if the port is
+    being migrated ('migrating_to' is present in any port binding profile).
+
+    The function returns None or the matching host.
+    '''
+    #LOG.info('LiveDebug: enter migrating_to_host 001')
+    for binding in (binding for binding in bindings if
+                    binding[pb_ext.STATUS] == constants.ACTIVE):
+        profile = binding.get('profile')
+        if not profile:
+            continue
+        '''
+        profile = (jsonutils.loads(profile) if isinstance(profile, str) else
+                   profile)
+        migrating_to = profile.get('migrating_to')
+        '''
+        # add by michael
+        if isinstance(profile, str):
+            migrating_to = jsonutils.loads(profile).get('migrating_to')
+            #LOG.info('LiveDebug: migrating_to_host 001 migrating_to: %s', migrating_to)
+        else:
+            migrating_to = profile.get('migrating_to')
+            #LOG.info('LiveDebug: migrating_to_host 002 migrating_to: %s', migrating_to)
+
+        if migrating_to:
+            if not host:  # Just know if the port is being migrated.
+                return migrating_to
+            if migrating_to == host:
+                return migrating_to
+    return None
|
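B1.3 lets an agent receive full device details during a live migration, instead of a "no active binding" answer, whenever the active binding's profile contains `migrating_to` and `[nova] live_migration_events` is enabled. The sketch below restates `migrating_to_host()` and the guard in `get_device_details()` as standalone functions. It assumes the bindings are plain dicts with a `status` key (standing in for `pb_ext.STATUS`) and uses the literal `'compute:'` for `DEVICE_OWNER_COMPUTE_PREFIX`. `has_no_active_binding_here` and the host names are hypothetical.

```python
import json

ACTIVE = 'ACTIVE'


def migrating_to_host(bindings, host=None):
    """Return the 'migrating_to' host of an active binding, or None.

    As in the patched rpc.py, the profile may be a JSON string or a dict.
    When `host` is given, only a matching 'migrating_to' is returned.
    """
    for binding in bindings:
        if binding['status'] != ACTIVE:
            continue
        profile = binding.get('profile')
        if not profile:
            continue
        if isinstance(profile, str):
            profile = json.loads(profile)
        migrating_to = profile.get('migrating_to')
        if migrating_to and (not host or migrating_to == host):
            return migrating_to
    return None


def has_no_active_binding_here(bindings, device_owner, binding_host, host,
                               live_migration_events=True):
    """The condition under which get_device_details() reports no binding."""
    migrating_to = migrating_to_host(bindings)
    return (not (migrating_to and live_migration_events) and
            device_owner.startswith('compute:') and
            binding_host != host)


bindings = [
    {'status': 'ACTIVE', 'host': 'compute-01',
     'profile': '{"migrating_to": "compute-02"}'},
    {'status': 'INACTIVE', 'host': 'compute-02', 'profile': {}},
]
print(migrating_to_host(bindings))                # compute-02
print(migrating_to_host(bindings, 'compute-03'))  # None
# The destination agent asks while the active binding is still on compute-01.
print(has_no_active_binding_here(bindings, 'compute:nova',
                                 'compute-01', 'compute-02'))  # False
```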

B1.4

|

--- neutron-bak/db/provisioning_blocks.py	2021-08-25 15:43:47.000000000 +0800
+++ neutron/db/provisioning_blocks.py	2021-09-03 09:32:41.000000000 +0800
@@ -137,8 +137,7 @@
             context, standard_attr_id=standard_attr_id):
         LOG.debug('Provisioning complete for %(otype)s %(oid)s triggered by '
                   'entity %(entity)s.', log_dict)
-        registry.notify(object_type, PROVISIONING_COMPLETE,
-                        'neutron.db.provisioning_blocks',
+        registry.notify(object_type, PROVISIONING_COMPLETE, entity,
                         context=context, object_id=object_id)

|

B1.5

|

--- neutron-bak/notifiers/nova.py	2021-08-25 16:02:33.000000000 +0800
+++ neutron/notifiers/nova.py	2021-09-03 09:32:41.000000000 +0800
@@ -13,6 +13,8 @@
 #    License for the specific language governing permissions and limitations
 #    under the License.

+import contextlib
+
 from keystoneauth1 import loading as ks_loading
 from neutron_lib.callbacks import events
 from neutron_lib.callbacks import registry
@@ -66,6 +68,16 @@
             if ext.name == 'server_external_events']
         self.batch_notifier = batch_notifier.BatchNotifier(
             cfg.CONF.send_events_interval, self.send_events)
+        self._enabled = True
+
+    @contextlib.contextmanager
+    def context_enabled(self, enabled):
+        stored_enabled = self._enabled
+        try:
+            self._enabled = enabled
+            yield
+        finally:
+            self._enabled = stored_enabled

     def _get_nova_client(self):
         global_id = common_context.generate_request_id()
@@ -163,6 +175,10 @@
             return self._get_network_changed_event(port)

     def _can_notify(self, port):
+        if not self._enabled:
+            LOG.debug('Nova notifier disabled')
+            return False
+
         if not port.id:
             LOG.warning('Port ID not set! Nova will not be notified of '
                         'port status change.')
|
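B1.5 gives the Nova notifier a switch. `context_enabled(False)` suppresses notifications for the duration of a `with` block, and the previous state is restored afterwards, even if the block raises. The sketch below is a cut-down stand-in for `neutron.notifiers.nova.Notifier` that keeps only this mechanism. `notify_port_active` and the `sent` list are hypothetical, standing in for the real event batching.

```python
import contextlib


class Notifier(object):
    """Minimal stand-in for the patched nova notifier."""

    def __init__(self):
        self._enabled = True
        self.sent = []

    @contextlib.contextmanager
    def context_enabled(self, enabled):
        # Save the current flag, switch it for the with-block, and restore
        # it in `finally` so an exception cannot leave it switched.
        stored_enabled = self._enabled
        try:
            self._enabled = enabled
            yield
        finally:
            self._enabled = stored_enabled

    def _can_notify(self, port_id):
        # The patch adds the _enabled check before the existing port checks.
        return self._enabled and bool(port_id)

    def notify_port_active(self, port_id):
        if self._can_notify(port_id):
            self.sent.append(('network-vif-plugged', port_id))


notifier = Notifier()
with notifier.context_enabled(False):
    notifier.notify_port_active('port-a')   # suppressed
notifier.notify_port_active('port-b')       # sent
print(notifier.sent)  # [('network-vif-plugged', 'port-b')]
```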

B1.6

|

--- nova-bak/compute/manager.py 2022-08-02 16:27:45.943428128 +0800
+++ nova/compute/manager.py 2021-09-03 09:35:24.529858458 +0800
@@ -6637,12 +6637,12 @@
             LOG.error(msg, msg_args)
 
     @staticmethod
-    def _get_neutron_events_for_live_migration(instance):
+    def _get_neutron_events_for_live_migration(instance, migration):
         # We don't generate events if CONF.vif_plugging_timeout=0
         # meaning that the operator disabled using them.
-        if CONF.vif_plugging_timeout and utils.is_neutron():
-            return [('network-vif-plugged', vif['id'])
-                    for vif in instance.get_network_info()]
+        if CONF.vif_plugging_timeout:
+            return (instance.get_network_info()
+                    .get_live_migration_plug_time_events())
         else:
             return []
 
@@ -6695,7 +6695,8 @@
             '''
             pass
 
-        events = self._get_neutron_events_for_live_migration(instance)
+        events = self._get_neutron_events_for_live_migration(
+            instance, migration)
         try:
             if ('block_migration' in migrate_data and
                     migrate_data.block_migration):

|
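Before B1.6, the compute manager expected one `network-vif-plugged` event for every VIF of the instance during live migration. After it, the manager expects only the events reported by `get_live_migration_plug_time_events()` (added in B1.7). If Nova waits for an event that Neutron never sends, the wait ends only when `vif_plugging_timeout` expires.

The toy model below shows that effect with `threading.Event`; it is not Nova's `wait_for_instance_event()`. The hypothetical `wait_for_events()` helper and the VIF names are illustrative. The simulated backend delivers an event for the hybrid-plugged VIF only. Waiting on both VIFs therefore leaves one event missing at the deadline, while waiting on the filtered list completes immediately.

```python
import threading
import time


def wait_for_events(expected, delivered, timeout):
    """Toy model: wait for every expected event until a shared deadline.

    `delivered` maps event tuples to threading.Event objects that the
    simulated network backend sets. Returns the events that did not
    arrive before the deadline.
    """
    deadline = time.monotonic() + timeout
    missing = []
    for event in expected:
        # An event the backend never sends is modelled as an unset flag.
        flag = delivered.get(event, threading.Event())
        remaining = max(0.0, deadline - time.monotonic())
        if not flag.wait(remaining):
            missing.append(event)
    return missing


# Two VIFs; the simulated backend only reports the hybrid-plugged one.
vif_ids = ["vif-hybrid", "vif-native"]
delivered = {("network-vif-plugged", "vif-hybrid"): threading.Event()}
delivered[("network-vif-plugged", "vif-hybrid")].set()

old_expected = [("network-vif-plugged", v) for v in vif_ids]  # pre-B1.6
new_expected = [("network-vif-plugged", "vif-hybrid")]        # post-B1.6

missing_old = wait_for_events(old_expected, delivered, timeout=0.2)
missing_new = wait_for_events(new_expected, delivered, timeout=0.2)
```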

B1.7

|

--- nova-bak/network/model.py 2022-08-02 16:27:47.490437859 +0800
+++ nova/network/model.py 2021-09-03 09:35:24.532858440 +0800
@@ -469,6 +469,14 @@
         return (self.is_hybrid_plug_enabled() and not
                 migration.is_same_host())
 
+    @property
+    def has_live_migration_plug_time_event(self):
+        '''Returns whether this VIF's network-vif-plugged external event will
+        be sent by Neutron at 'plugtime' - in other words, as soon as neutron
+        completes configuring the network backend.
+        '''
+        return self.is_hybrid_plug_enabled()
+
     def is_hybrid_plug_enabled(self):
         return self['details'].get(VIF_DETAILS_OVS_HYBRID_PLUG, False)
 
@@ -527,20 +535,26 @@
         return jsonutils.dumps(self)
 
     def get_bind_time_events(self, migration):
-        '''Returns whether any of our VIFs have 'bind-time' events. See
-        has_bind_time_event() docstring for more details.
+        '''Returns a list of external events for any VIFs that have
+        'bind-time' events during cold migration.
         '''
         return [('network-vif-plugged', vif['id'])
                 for vif in self if vif.has_bind_time_event(migration)]
 
+    def get_live_migration_plug_time_events(self):
+        '''Returns a list of external events for any VIFs that have
+        'plug-time' events during live migration.
+        '''
+        return [('network-vif-plugged', vif['id'])
+                for vif in self if vif.has_live_migration_plug_time_event]
+
     def get_plug_time_events(self, migration):
-        '''Complementary to get_bind_time_events(), any event that does not
-        fall in that category is a plug-time event.
+        '''Returns a list of external events for any VIFs that have
+        'plug-time' events during cold migration.
         '''
         return [('network-vif-plugged', vif['id'])
                 for vif in self if not vif.has_bind_time_event(migration)]
 
-
 class NetworkInfoAsyncWrapper(NetworkInfo):
     '''Wrapper around NetworkInfo that allows retrieving NetworkInfo
     in an async manner.

|
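B1.7 decides which VIFs get a plug-time event from a single property. According to the docstring in the patch, Neutron sends `network-vif-plugged` at plug time only for VIFs with OVS hybrid plug enabled.

The sketch below mirrors that structure with simplified stand-ins for Nova's `VIF` (a dict) and `NetworkInfo` (a list of VIFs). The `VIF_DETAILS_OVS_HYBRID_PLUG = "ovs_hybrid_plug"` key name is an assumption here.

```python
# Assumed key name for the hybrid-plug flag in a VIF's 'details' dict.
VIF_DETAILS_OVS_HYBRID_PLUG = "ovs_hybrid_plug"


class VIF(dict):
    """Simplified stand-in for nova.network.model.VIF."""

    @property
    def has_live_migration_plug_time_event(self):
        # Plug-time events are expected only for hybrid-plugged VIFs.
        return self.is_hybrid_plug_enabled()

    def is_hybrid_plug_enabled(self):
        return self["details"].get(VIF_DETAILS_OVS_HYBRID_PLUG, False)


class NetworkInfo(list):
    """Simplified stand-in for nova.network.model.NetworkInfo."""

    def get_live_migration_plug_time_events(self):
        return [("network-vif-plugged", vif["id"])
                for vif in self if vif.has_live_migration_plug_time_event]


network_info = NetworkInfo([
    VIF(id="vif-1", details={VIF_DETAILS_OVS_HYBRID_PLUG: True}),
    VIF(id="vif-2", details={}),
])
print(network_info.get_live_migration_plug_time_events())
# [('network-vif-plugged', 'vif-1')]
```

`has_live_migration_plug_time_event` is a property, so the list comprehension reads it without parentheses, exactly as the patched `get_live_migration_plug_time_events()` does.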

C1.1

|

--- linux-3.10.0-1062.18.1.el7.orig/net/ipv4/udp_offload.c 2020-02-12 21:45:22.000000000 +0800
+++ linux-3.10.0-1062.18.1.el7/net/ipv4/udp_offload.c 2022-08-17 15:56:27.540557289 +0800
@@ -261,7 +261,7 @@ struct sk_buff **udp_gro_receive(struct
 	struct sock *sk;
 
 	if (NAPI_GRO_CB(skb)->encap_mark ||
-	    (skb->ip_summed != CHECKSUM_PARTIAL &&
+	    (uh->check && skb->ip_summed != CHECKSUM_PARTIAL &&
 	     NAPI_GRO_CB(skb)->csum_cnt == 0 &&
 	     !NAPI_GRO_CB(skb)->csum_valid))
 		goto out;

|
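C1.1 adds `uh->check &&` to the condition that makes `udp_gro_receive()` jump to `out` instead of aggregating the packet. Over IPv4, a UDP checksum field of 0 means the sender computed no checksum (RFC 768). Zero outer checksums are common in tunnel traffic such as VXLAN, where RFC 7348 recommends them.

- Before the patch, such a packet was skipped whenever the NIC or an earlier GRO layer had not validated a checksum.
- After the patch, only packets that actually carry a checksum still need prior validation.

The Python function below is a hypothetical model of that boolean expression only. The local constants copy the `CHECKSUM_*` values from the kernel's `include/linux/skbuff.h`.

```python
# Local copies of the kernel's skb->ip_summed values.
CHECKSUM_NONE = 0
CHECKSUM_UNNECESSARY = 1
CHECKSUM_COMPLETE = 2
CHECKSUM_PARTIAL = 3


def gro_skips_packet(encap_mark, uh_check, ip_summed, csum_cnt, csum_valid,
                     patched):
    """Model of the `goto out` condition in udp_gro_receive()."""
    unverified = (ip_summed != CHECKSUM_PARTIAL
                  and csum_cnt == 0
                  and not csum_valid)
    if patched:
        # C1.1: a zero checksum field means "no checksum", nothing to verify.
        unverified = uh_check != 0 and unverified
    return bool(encap_mark or unverified)


# Tunnel-style packet: zero UDP checksum, not validated by the NIC.
zero_csum = dict(encap_mark=False, uh_check=0, ip_summed=CHECKSUM_NONE,
                 csum_cnt=0, csum_valid=False)
print(gro_skips_packet(patched=False, **zero_csum))  # True
print(gro_skips_packet(patched=True, **zero_csum))   # False
```

A packet with a nonzero, unvalidated checksum is still skipped in both versions, so the patch relaxes the check only for packets that carry no checksum at all.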